More about Bash

Don’t forget this week’s reading.

In this lecture, we continue our discussion of the Unix shell and its commands.

Goals

We plan to learn the following today:

- Redirection and pipes

- Special characters and quoting

- Standard input, output, and error

We’ll do this activity in today’s class:

- Sit with your group and experiment with shell pipelines with this assignment.

Redirection and pipes

So far, the Unix programs we have seen used their default input and output, called standard input (stdin) and standard output (stdout): by default, the keyboard is the standard input and the display is the standard output. The Unix shell can redirect both the input and the output of programs. As an example of output redirection, consider the following.

$ date > listing

$ ls -lR public_html/ >> listing

The output redirection > writes the output of date to the file called listing; that is, the ‘standard output’ of the date process has been directed to the file instead of the default, the display.

Note that the > operation created a file that did not exist before the output redirection command was executed.

Next, we append a recursive, long-format directory listing to the same file; by using the >> (double >) we tell the shell to append to the file rather than overwriting the file.

Note that the > or >> and their target filenames are not arguments to the command - the command simply writes to stdout, as it always does, but the shell has arranged for stdout to be directed to a file instead of the terminal.
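A minimal sketch of the difference between the two operators, run in a scratch directory so no real files are touched (the file name log.txt and the variable scratch are made up for this illustration):

```shell
scratch=$(mktemp -d)                 # temporary directory for the demonstration

echo "first"  >  "$scratch/log.txt"  # > creates log.txt because it does not exist yet
echo "second" >  "$scratch/log.txt"  # > again: the old contents are replaced
echo "third"  >> "$scratch/log.txt"  # >> appends to the end instead

cat "$scratch/log.txt"               # shows second, then third
```

In each case echo simply writes to stdout; only the shell knows that stdout now leads to a file.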

The shell also supports input redirection.

This provides input to a program (rather than the keyboard).

Let’s create a file of prime numbers using output redirection.

The input to the cat command can come from the standard input (i.e., the keyboard).

We can instruct the shell to redirect the cat command’s output (stdout) to a file named primes.

The numbers are input at the keyboard and CTRL-D is used to signal the end of the file (EOF).

$ cat > primes

61

53

41

2

3

11

13

18

37

5

19

23

29

31

47

53

59

$

Input redirection < tells the shell to use a file as input to the command rather than the keyboard.

In the input redirection example below primes is used as input to cat which sends its standard output to the screen.

$ cat < primes

61

53

41

2

3

11

13

18

37

5

19

23

29

31

47

53

59

$

Many Unix commands (e.g., cat, sort) allow you to provide input from stdin if you do not specify a file on the command line.

For example, if you type cat (CR) (carriage return) then the command expects input from the standard input.
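As a small sketch (the file nums is invented for this illustration), the same command can be given a file name or left to read stdin. The wc command makes the difference visible: when it reads stdin it has no file name to print.

```shell
scratch=$(mktemp -d)
printf '3\n1\n2\n' > "$scratch/nums"

# File named as an argument: sort opens the file itself.
sort -n "$scratch/nums"

# No file argument: sort reads stdin, which the shell has connected to the file.
sort -n < "$scratch/nums"

# wc prints the file name when given one, but only the count when reading stdin.
wc -l "$scratch/nums"
wc -l < "$scratch/nums"
```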

Unix also supports a powerful ‘pipe’ operator for passing data between commands using the operator | (a vertical bar, usually located above the \ key on your keyboard).

Pipes connect commands that run as separate processes; the processes are scheduled as data becomes available.

Pipes were invented by Doug McIlroy while he was working with Ken Thompson and Dennis Ritchie at AT&T Bell Labs. (As I mentioned earlier, Doug has been an adjunct professor here at Dartmouth College for several years now.) In this two-page interview, at the middle of the third column, Doug tells how pipes were invented and the | character selected as the operator. Pay special attention to the next paragraph: the Dartmouth Time Sharing System had something similar, even earlier!

Pipes are a clever invention indeed, since they eliminate the need for separate temporary files to share data between processes.

Because commands are implemented as processes, a program reading an empty pipe will be suspended until there is data or information ready for it to read.

There is no limit to the number of programs or commands in the pipeline.

In our example below there are four programs in the pipeline, all running simultaneously, each waiting on its input:

$ sort -n primes | uniq | grep -v 18 | less

2

3

5

11

13

19

23

29

31

37

41

47

53

59

61

$

What is the difference between pipes and redirection?

Basically, redirection (>, >>, <) directs the stdout of a command to a file, or feeds a file to the stdin of a command.

Pipes (|) redirect the stdout of one command to the stdin of another command.

This operator allows us to ‘glue’ together programs as ‘filters’ to process the plain text sent between them (plain text between the processes - a nice design decision).

This supports the notion of reuse and allows us to build sophisticated programs quickly and simply. It’s another cool feature of Unix.

Notice three new commands above: sort, uniq, and grep.

- sort reads lines from stdin and outputs the lines in sorted order; here -n tells sort to use numeric order (rather than alphabetical order);

- uniq removes duplicates, printing only one of a run of identical lines;

- grep prints lines matching a pattern (more generally, a regular expression); here, -v inverts this behavior: print lines that do not match the pattern. In this case, the pattern is simply 18 and grep does not print that number as it comes through.

And, as we saw last time, less pauses the output when it would scroll off the screen.

Note that the original file - primes - is not changed by executing the command line above.

Rather, the file is read in by the sort command and the data is manipulated as it is processed by each stage of the command pipe line.
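To see what a pipe saves, here is a sketch that does the same two-stage job twice: once through a temporary file and once through a pipe. The file names (nums, sorted.tmp, via_files, via_pipe) are invented for this illustration.

```shell
scratch=$(mktemp -d)
printf '5\n3\n5\n2\n' > "$scratch/nums"

# Without a pipe: the first stage writes a temporary file that the second stage reads.
sort -n "$scratch/nums" > "$scratch/sorted.tmp"
uniq "$scratch/sorted.tmp" > "$scratch/via_files"
rm "$scratch/sorted.tmp"

# With a pipe: same result, no temporary file to create or clean up.
sort -n "$scratch/nums" | uniq > "$scratch/via_pipe"

cat "$scratch/via_pipe"      # shows 2, 3, 5 on separate lines
cat "$scratch/nums"          # the input file still holds 5, 3, 5, 2
```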

Because sort and cat are happy to read their input data from stdin, or from a file given as an argument, the following pipelines all achieve the same result:

$ sort -n < primes | uniq | grep -v 18 | less

$ sort -n primes | uniq | grep -v 18 | less

$ cat < primes | sort -n | uniq | grep -v 18 | less

$ cat primes | sort -n | uniq | grep -v 18 | less

Which do you think would be most efficient?

For commands that can directly take file names as arguments and read file content, we generally do not need explicit input redirection (<).

Another pipeline: How to get the list of existing usernames on a machine?

$ cut -d : -f 1 /etc/passwd | sort > usernames.txt

See man cut to understand what the first command does.

Another example: what is the most popular shell? Try each of these in turn:

$ cut -d : -f 7 /etc/passwd

$ cut -d : -f 7 /etc/passwd | less

$ cut -d : -f 7 /etc/passwd | sort

$ cut -d : -f 7 /etc/passwd | sort | uniq -c

$ cut -d : -f 7 /etc/passwd | sort | uniq -c | sort -n

$ cut -d : -f 7 /etc/passwd | sort | uniq -c | sort -nr
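The counting idiom at the end of that sequence works on any list of lines. Here is a sketch using made-up sample data in place of field 7 of /etc/passwd, so the result does not depend on the machine. The first sort matters because uniq only collapses identical lines that are adjacent.

```shell
# Made-up sample data standing in for the login-shell field of /etc/passwd.
shells='/bin/bash
/bin/zsh
/bin/bash
/sbin/nologin
/bin/bash
/bin/zsh'

# sort groups identical lines together, uniq -c counts each run,
# and sort -nr puts the largest count first.
printf '%s\n' "$shells" | sort | uniq -c | sort -nr
```

With this sample, /bin/bash appears three times and so lands on the first line of the output.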

Standard input, output and error

As we learned above, every process (a running program) has a standard input (abbreviated to stdin) and a standard output (stdout).

The shell sets stdin to the keyboard by default, but the command line can tell the shell to redirect stdin using < or a pipe.

The shell sets stdout to the display by default, but the command line can tell the shell to redirect stdout using > or >>, or to a pipe.

Each process also has a standard error (stderr), which most programs use for printing error messages.

The separation of stdout and stderr is important when stdout is redirected to a file or pipe, because normal output can flow into the file or pipe while error messages still reach the user on the screen.

Inside the running process these three streams are represented with numeric file descriptors:

0: stdin

1: stdout

2: stderr

You can tell the shell what or where to redirect using these numbers; > is shorthand for 1> and < is shorthand for 0<. Redirecting only the error output (file descriptor 2) is 2>.
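A short sketch of the shorthand, using invented file names a and b: the implicit and explicit forms behave identically.

```shell
scratch=$(mktemp -d)

echo "hello" >  "$scratch/a"    # implicit descriptor 1 (stdout)
echo "hello" 1> "$scratch/b"    # explicit descriptor 1: same effect

cat <  "$scratch/a"             # implicit descriptor 0 (stdin)
cat 0< "$scratch/b"             # explicit descriptor 0: same effect
```

Note that the digit must touch the operator: `1>` is a redirection, whereas `1 >` would pass 1 to the command as an argument.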

Suppose on the plank server, I am using the cat command to view the content of the primes file and jokes.txt file, the latter of which does not exist in my current directory.

$ cat primes jokes.txt

61

53

41

2

3

11

13

18

37

5

19

23

29

31

47

53

59

cat: jokes.txt: No such file or directory

$

The numbers you see are the normal output, since they are the contents of the primes file. The last line is error output: jokes.txt does not exist, so the cat command reports an error.

Next I can redirect the normal output to a file called normal and the error output to a file named errors:

$ cat primes jokes.txt > normal 2> errors

$

Now there is nothing printed on the screen, but if you check the normal and errors files, you will see the output there!

If you want to ignore the error output entirely, you can redirect it to a place where all characters go and never return!

$ cat primes jokes.txt > normal 2> /dev/null

$

The file called /dev/null is a special kind of file - it’s not a file at all, actually, it’s a ‘device’ that simply discards anything written to it.

(If you read from it, it appears to be an empty file.)
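Both properties of /dev/null can be checked with a small sketch (the file names real and missing are made up; missing is deliberately never created):

```shell
scratch=$(mktemp -d)
echo "data" > "$scratch/real"

# The complaint about the missing file is discarded; the contents of the
# real file still reach stdout. cat still exits with a failure status,
# which "|| true" ignores so that a script running this would continue.
cat "$scratch/real" "$scratch/missing" 2> /dev/null || true

# Reading /dev/null hits end-of-file immediately, so wc counts zero bytes.
wc -c < /dev/null
```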

Special characters

There are a number of special characters interpreted by the shell - spaces, tabs, wildcard characters for filename expansion, redirection symbols, and so forth. Special characters have special meaning and cannot be used as regular characters because the shell interprets them in a special manner. These special characters include:

& ; | * ? ` " ' [ ] ( ) $ < > { } # / \ ! ~

We have already used several of these special characters. Don’t try to memorize them at this stage. Through use, they will become second nature. We will just give some examples of the ones we have not discussed so far.

Quoting

If you need to use one of these special characters as a regular character, you can tell the shell not to interpret it by escaping or quoting it.

To escape a single special character, precede it with a backslash \; earlier we saw how to escape the character * with \*.

To escape multiple special characters (as in **), quote each: \*\*.

You can also quote using single quotation marks such as '**' or double quotation marks such as "**" - but these have subtly different behavior.

You might use this form when quoting a filename with embedded spaces: "Homework assignment".
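As a sketch of the three ways to protect a special character, here is the * wildcard in a scratch directory holding two made-up files:

```shell
scratch=$(mktemp -d)
cd "$scratch"
touch a.txt b.txt

echo *        # unquoted: the shell expands * to the file names a.txt b.txt
echo \*       # backslash escape: a literal *
echo '*'      # single quotes: a literal *
echo "*"      # double quotes: also a literal *
```

For the * character all three forms give the same result; they differ for other characters, such as $.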

You will often need to pass special characters as part of arguments to commands and other programs - for example, an argument that represents a pattern to be interpreted by the command, as happens often with find and grep.

There is a situation where single quotes work differently than double quotes.

If you use a pair of single quotes around a shell variable substitution (like $USER), the variable’s value will not be substituted, whereas it would be substituted within double quotes:

$ echo "$LOGNAME uses the $SHELL shell and his home directory is $HOME."

f001cxb uses the /bin/bash shell and his home directory is /thayerfs/home/f001cxb.

$ echo '$LOGNAME uses the $SHELL shell and his home directory is $HOME.'

$LOGNAME uses the $SHELL shell and his home directory is $HOME.

$
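The same contrast can be reproduced with a variable of your own, so the result does not depend on who is logged in. A minimal sketch, where the variable name course is invented for this illustration:

```shell
course="CS50"                 # hypothetical variable, used only for this demonstration

echo "Welcome to $course"     # double quotes: prints Welcome to CS50
echo 'Welcome to $course'     # single quotes: prints Welcome to $course
echo "Price: \$5 * 2"         # inside double quotes, \$ is a literal $ and * is not expanded
```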

Example 1. Double-quotes are especially important in shell scripts, because the variables involved might have been user input (a command-line argument or a keyboard input) or might be a file name or the output of a command; such variables should always be quoted when substituted, because spaces (and other special characters) embedded in the value of the variable can cause confusion. Thus:

directoryName="Homework three"

...

mkdir "$directoryName"

Try it!
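Here is a sketch of what goes wrong without the quotes, assuming bash's default word splitting and a scratch directory so nothing real is affected:

```shell
scratch=$(mktemp -d)
cd "$scratch"
directoryName="Homework three"

mkdir $directoryName       # unquoted: the shell splits the value into two words
ls                         # two directories: Homework and three
rmdir Homework three       # clean up the mistake

mkdir "$directoryName"     # quoted: one argument, so one directory
ls                         # one directory: Homework three
```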

Example 2. Escapes and quoting let you pass special characters and patterns to commands.

Suppose I have email addresses of our learning fellows in LFs.txt, one per line.

$ cat LFs.txt

Jack A. McMahon <Jack.A.McMahon.23@dartmouth.edu>

Cleo De Rocco <Cleo.M.De.Rocco.24@dartmouth.edu>

Marvin Escobar Barajas <Marvin.Escobar.Barajas.25@dartmouth.edu>

Andrea S. Robang <Andrea.S.Robang.24@dartmouth.edu>

Samuel R. Barton <Samuel.R.Barton.25@dartmouth.edu>

Rehoboth K. Okorie <Rehoboth.K.Okorie.23@dartmouth.edu>

$

I can put the addresses on one line, separated by commas, using the tr command:

$ tr "\n" , < LFs.txt

Jack A. McMahon <Jack.A.McMahon.23@dartmouth.edu>,Cleo De Rocco <Cleo.M.De.Rocco.24@dartmouth.edu>,Marvin Escobar Barajas <Marvin.Escobar.Barajas.25@dartmouth.edu>,Andrea S. Robang <Andrea.S.Robang.24@dartmouth.edu>,Samuel R. Barton <Samuel.R.Barton.25@dartmouth.edu>,Rehoboth K. Okorie <Rehoboth.K.Okorie.23@dartmouth.edu>,

The tr command translates each instance of the character given in the first argument (\n) to the character given in the second argument (,). \n denotes a single special character in Unix called ‘newline’, which marks the end of one line and the beginning of the next. We used double-quotes because \ is a special character in bash and must be protected from the shell so that it reaches tr intact. Without the quotes, we would need to write \\n.

It’s important to note that the tr command does not take file names as arguments, so we have to use input redirection for the command to read the content of LFs.txt.
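A small sketch with made-up input shows both points: tr reads only stdin, and the quoting decides what tr actually receives as its first argument.

```shell
# Quoted: tr receives backslash-n and treats it as the newline character.
printf 'red\ngreen\nblue\n' | tr "\n" ,     # prints red,green,blue,
echo                                        # end the line, since tr replaced every newline

# Unquoted: the shell removes the backslash, so tr is asked to replace the letter n.
printf 'banana\n' | tr \n ,                 # prints ba,a,a
```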

Example 3.

An even more powerful filtering tool - the stream editor called sed - allows you to transform occurrences of one or more patterns in the input file(s):

sed pattern [file]...

Here are some examples with the LFs.txt file:

$ # remove Jack and excess white space

$ sed -e '/Jack/d' -e 's/  */ /g' LFs.txt

Cleo De Rocco <Cleo.M.De.Rocco.24@dartmouth.edu>

Marvin Escobar Barajas <Marvin.Escobar.Barajas.25@dartmouth.edu>

Andrea S. Robang <Andrea.S.Robang.24@dartmouth.edu>

Samuel R. Barton <Samuel.R.Barton.25@dartmouth.edu>

Rehoboth K. Okorie <Rehoboth.K.Okorie.23@dartmouth.edu>

$ # remove Jack, remove names, make comma-sep list of addresses

$ sed -e '/Jack/d' -e 's/.*<//' -e 's/>.*/,/' LFs.txt

Cleo.M.De.Rocco.24@dartmouth.edu,

Marvin.Escobar.Barajas.25@dartmouth.edu,

Andrea.S.Robang.24@dartmouth.edu,

Samuel.R.Barton.25@dartmouth.edu,

Rehoboth.K.Okorie.23@dartmouth.edu,

$

The above examples use the -e switch to sed, which allows one to list more than one pattern on the same command.

A few quick notes about sed’s patterns:

- d deletes lines matching the pattern

- p prints lines matching the pattern (useful with -n)

- s substitutes text for matches to the pattern.

I also used regular expressions to specify patterns, including excess white space (a space followed by any number of further spaces), any string before the < character (.*<), and any string after the > character (>.*). Please refer to this summary on regular expressions so that you know how to use them too!
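The three sed commands can be tried one at a time on a few made-up lines (the names and example.org addresses below are invented for this sketch):

```shell
# Made-up input lines with uneven spacing.
lines='alice  <alice@example.org>
bob <bob@example.org>
carol   <carol@example.org>'

printf '%s\n' "$lines" | sed '/bob/d'        # d: every line except the one matching bob
printf '%s\n' "$lines" | sed -n '/bob/p'     # p with -n: only the line matching bob
printf '%s\n' "$lines" | sed 's/.*<//'       # s: delete everything up to and including <
printf '%s\n' "$lines" | sed 's/  */ /g'     # s with g: squeeze each run of spaces to one
```

In the last command the pattern is two spaces followed by *, which means one space followed by zero or more further spaces.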

Example 4.

I saved a list of students enrolled in CS50 in the file /thayerfs/courses/22spring/cosc050/workspace/students.txt on the plank server.

Each line is of the form First.M.Last.xx@Dartmouth.edu.

Let’s suppose you all decide to move to Harvard.

$ sed s/Dartmouth/Harvard/ /thayerfs/courses/22spring/cosc050/workspace/students.txt

...

$ sed -e 's/Dartmouth/Harvard/' -e 's/\.[0-9][0-9]//' /thayerfs/courses/22spring/cosc050/workspace/students.txt

...

The second form removes the dot and two-digit class number (the pattern is again specified using a regular expression).

Notice how I quoted the patterns in the second command to protect them from the shell, and even escaped the dot from sed’s normal meaning (with s, sed looks for a pattern expressed as a regular expression, in which a dot matches any character) so sed would look for a literal dot in that position.

Here’s another fun pipe: count the number of students from each class (leveraging the class numbers in email addresses). Here ^$ is a regular-expression pattern, where ^ and $ represent the beginning and the end of a line; thus ^$ matches an empty line.

$ tr -c -d 0-9\\n < /thayerfs/courses/22spring/cosc050/workspace/students.txt | sed 's/^$/other/' | sort | uniq -c | sort -nr

35 25

24 24

15 23

4 22

1 other

$
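To see how that pipeline works without access to students.txt, here is a sketch on four hypothetical addresses in the same format. It writes the character set as '0-9\n' in single quotes, which passes tr the same argument as the unquoted 0-9\\n used above.

```shell
# Hypothetical addresses; the last one has no class number.
addresses='Ann.B.Lee.24@Dartmouth.edu
Raj.Patel.25@Dartmouth.edu
Kim.C.Wu.24@Dartmouth.edu
Visiting.Student@Dartmouth.edu'

# Stage 1: -c complements the set and -d deletes, so everything except
# digits and newlines is removed, leaving a class number or an empty line.
printf '%s\n' "$addresses" | tr -c -d '0-9\n'

# Full pipeline: label the empty lines, then count each distinct value.
printf '%s\n' "$addresses" | tr -c -d '0-9\n' | sed 's/^$/other/' | sort | uniq -c | sort -nr
```

With this sample, class 24 has two students and therefore appears first in the final output.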

See man sed or the sed FAQ for more info.

You’ll want to learn a bit about regular expressions, which are used to describe patterns in sed’s commands; see sed regexp info.

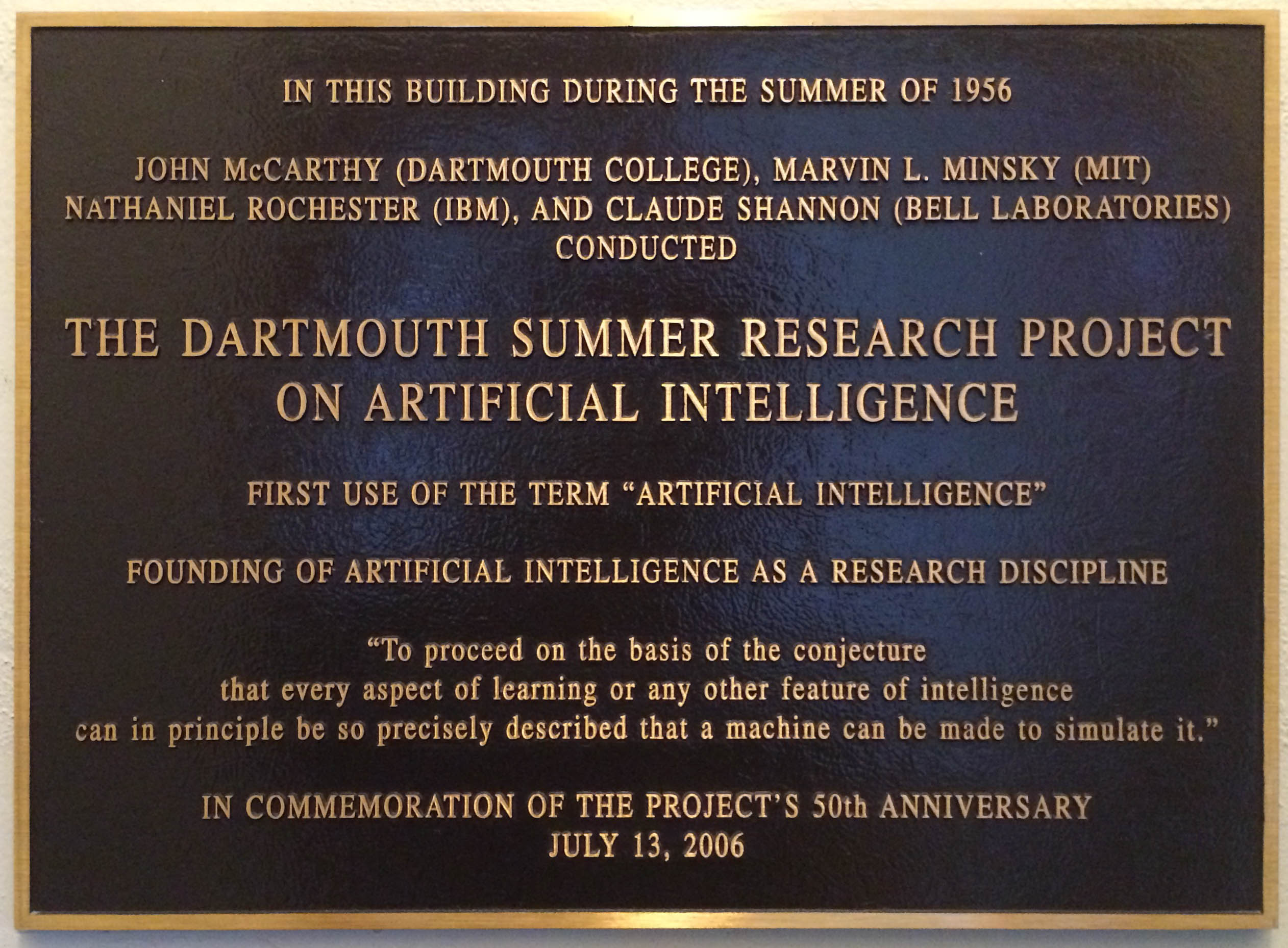

Historical note

Another important moment in computing history, that happened at Dartmouth!

Can you find the building?

Other things

There’s never enough time in class to show you everything.

Compressing and archiving files

It is often useful to bundle several files into a compressed archive file.

You may have encountered files like this on the Internet - such as files.zip, something.tar, or something-else.tgz.

Each packs together several files - including directories, creation dates, access permissions, as well as file contents - into one single file.

This is a convenient way to transfer large collections of files.

On Unix it is most common to use the tar utility (short for tape archive - from back when we used tapes) to create an archive of all the files and directories listed in the arguments and name it to something appropriate.

We often ask tar to compress the resulting archive too.

Given a directory stuff, you can create (c) a compressed tar archive (aka, a “tarball”), and then list (t) its contents.

The f refers to a file and the z refers to compression.

$ mkdir stuff

$ echo > stuff/x

$ tar cfz stuff.tgz stuff

98.8%

$ tar tfz stuff.tgz

stuff/

stuff/x

$

The command leaves the original directory and files intact.

Notice that tar has an unconventional syntax for its switches: no dash (-) is needed before them.

To unpack the archive,

$ tar xfz stuff.tgz

In short, c for create, t for table of contents (list), x for extract.

The f implies that the next argument is the tarball file name.

The z indicates that the tarball should be compressed.

By convention, a tarball filename ends in .tar if not compressed, .tgz if compressed.
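The whole round trip can be sketched in a scratch directory: create the tarball, list it, delete the original, and extract it again to confirm that nothing was lost. The file content hello is made up for this illustration.

```shell
scratch=$(mktemp -d)
cd "$scratch"
mkdir stuff
echo "hello" > stuff/x

tar cfz stuff.tgz stuff     # create a compressed archive of the directory
tar tfz stuff.tgz           # list its contents: stuff/ and stuff/x

rm -r stuff                 # remove the original to show that extraction restores it
tar xfz stuff.tgz           # extract into the current directory
cat stuff/x                 # prints hello
```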

CS50 Spring 2022
