Multi-class Object Categorization in Images

CS 34/134 Project Milestone:

Lu He, Tuobin Wang

May 11, 2009

Accomplishments that have been achieved so far:

1.GUI Design:

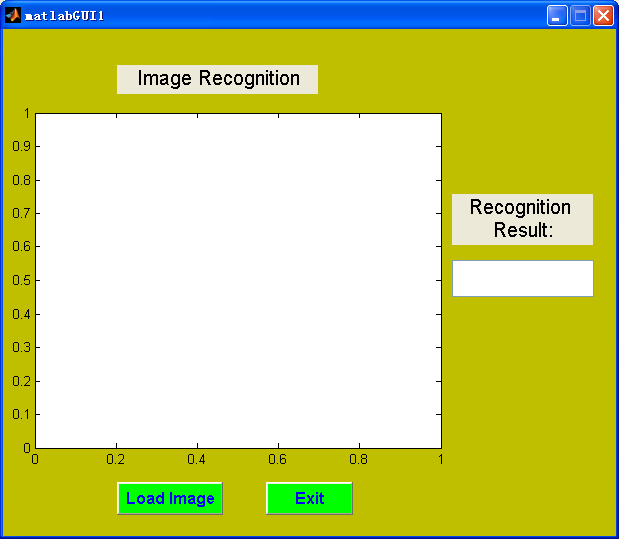

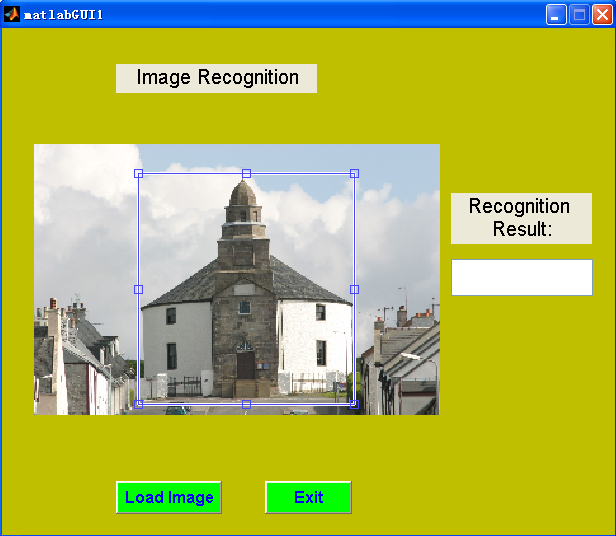

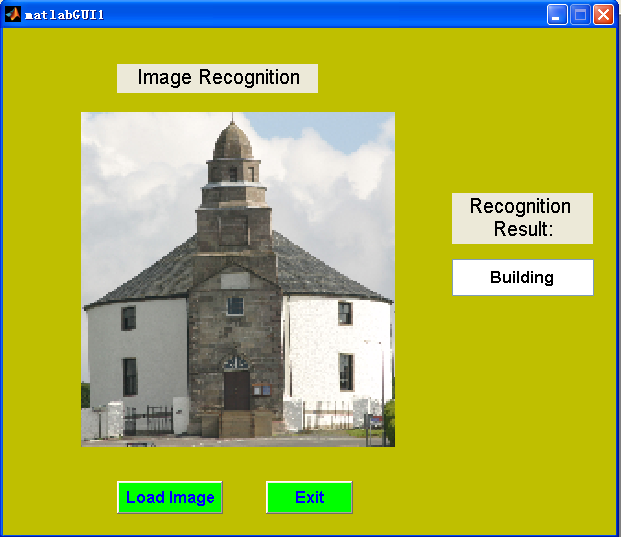

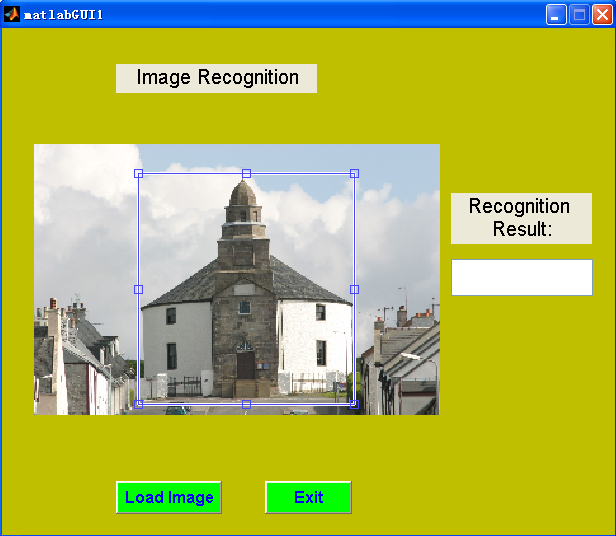

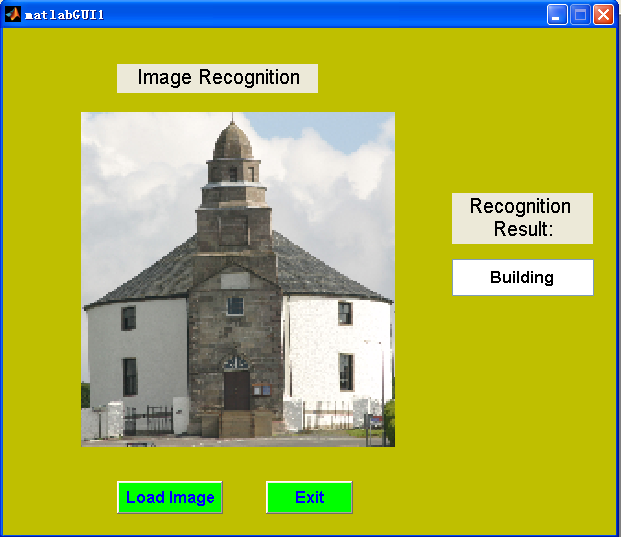

We accomplished a simple GUI of our project (fig1), in which user can load an image from his/her local computer (fig2) and select a region (fig3), then our final project can show the recognition result in the right textfielg (fig4) automatically(To be done).

|

|

| Fig1: Simple GUI | Fig2: Load an image |

|

|

| Fig3: Select a region | Fig4: Image recognition |

2.

Preprocessing of training images:

In our

project, each training image is changed to CIE L, a, b color model, and

then is

convolved with 17 filter-banks to generate features. The filter-banks

are made

of 3 Gaussians, 4 Laplacian of Gaussians (LoG) and 4 first order

derivatives of

Gaussians. While the three Gaussian kernels are applied to each CIE L,

a, b

channel,each with three different value σ = 1,2,4, the four LoGs

are applied to

the L channel only,each with four different value σ =

1,2,4,8, and the

four derivative of Gaussians kernels were divided into two x- and y-

aligned

set, each with two different value σ = 2,4. Then, we get each

pixel in the

image associated with 17-dimensional feature vector.

3. K-mean

cluster:

After all

training images are preprocessed, the whole set of feature vectors for

all the

training images are then clustered by the unsupervised K-mean algorithm

with a

large initial value K (in the order of thousands) and by calculating

Mahalanobis

distance between features during clustering. Hence, after doing k-mean

on all

the training images, we get k clusters finally.

4. Universal

visual dictionary(UVD):

After clustering, we calculate the covariance for each cluster, then the set of these K clusters and the associate covariances constitute the initial UVD.

5. Building

histograms

|

|

|

| Original Image | Ground Truth |

In the above

figures, the left one is an original image from the training dataset

and the

right one is the ground truth correspondingly. As you may notice, the

ground

truth figure has been separated manually into five regions and all

marked with

one of three colors (representing three objects).

After we

create the initial UVD, we build histograms over this UVD for

every training region which has been marked in the ground truth.

Things to be done:

Since the size of the initial UVD K is very large, our goal is to use a mapping to merge bins in the initial UVD to generate the final UVD with size T (T<<K), we decide to use the model and the algorithm proposed in [1], which makes our mapping results both compact and discriminative.

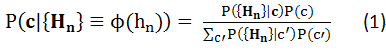

Our model is

to maximize the posterior probability of ground truth labels given all

the

histograms P(c|{H})

by using Bayes rules(1). The term in the

denominator acts to penalize the mappings which reduce discriminative

and the

numerator favors mappings which lead to intra-class compactness. From

(1), the

key is to compute the conditional probability of all the histograms

given the

ground truth labels,P({ H}|c). The assumption to our

model is

that we model the histogram for each class using a Gaussian

distribution and we

apply the variance stabilizing transformation of a multinomial to make

the

variance constant rather than linearly dependent on the mean. For each

class,

we define a prior. Then multiply them up to compute P({H}|c).

Our algorithm is to iteratively merge a pair of textons in UVD that most improves the value P(c|{H}), if we cannot find this pair, then stop and we get the final UVD.

For the timeline we submitted in our proposal, we think our project is

on

track. In our schedule, we need to program in another one week and then

finish

coding.

Reference:

[1]. John Winn, Antonio Criminisi, Thomas Minka, Object

Categorization by Learned Universal Visual Dictionary, in

Proc. IEEE

Intl. Conf. on Computer Vision (ICCV)., Jan. 2005