Reinforcement Learning in Tic-Tac-Toe Game

and Its Similar Variations

Group Members: Peng Ding and Tao Mao

Thayer School of Engineering at Dartmouth College

1. What Is Tic-Tac-Toe?

Tic-tac-toe is traditionally a popular game among kids: in its 3 by 3 board two persons alternately place one piece at a time; one wins when he or she has three pieces of his or her own in a row, whether horizontally, vertically, or diagonally.

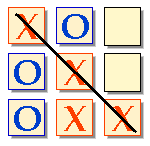

Figure 1 Winning situation for player "X" in Tic-tac-toe

2. Goals of the Project

We will apply reinforcement learning, specifically temporal-difference learning, to evaluate each state's numerical value, based on which an agent chooses its next move. Clearly speaking, the "state" here is an "afterstate", defined as a state right after the agent's move.

3. Methods

3.1 Representation of State Space

Multi-dimensional vectors are used to describe the state space of each situation. For example, as shown in Figure 1, we give it the following representing vector

s=[1, 2, 0, 2, 1, 0, 2, 1, 0]'

where 1 indicates player "X" places a piece in this location, 2 indicates player "O" places a piece in this location, and 0 indicates this is an empty location.

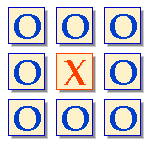

Since, in this representation, there are many impossible states as shown in Figure 2, we can apply harsh table to memorize feasible states instead of keeping a very large array which has lots of unused entries.

Figure 2 Impossible state occurring in vector representation

3.2 Introduction to Reinforcement Learning [1]

Reinforcement learning (RL) is an unsupervised machine learning technique, which "learns" from the interactive environment's rewards to approximate values of state-action pairs and maximize the long-term sum of rewards. It has four essential components: state set S, action set A, rewards from the environment R, and values for state-action pairs V.

3.3 Temporal-Difference (TD) Method

A little different from the standard RL and specifically for board game applications, we combine state set S and action set A into a new "afterstate" set S. The reasons are: (1) in board games, a state after a move is deterministic; (2) Different "prestate" and action may come to the same "afterstate", thus possibly holding redundancy in many state-action pairs.

We will use temporal-difference method, one of reinforcement learning techniques, to approximate state values by updating values of visited states after each training game.

![]()

where s is the current state, s' is the next state and V(s) is a state value for state s and ![]() is the learning rate, ranging within (0, 1].

is the learning rate, ranging within (0, 1].

4. Datasets

For simple games such as Tic-tac-toe, two compute agents will play against each other and learn game strategies from simulated games. This training method is called self-play, which has several advantages such as that an agent has general strategies rather than those associated with a fixed opponent. However, it would have a slow convergence rate, especially in the early learning stage [2].

Thus, we will also consider using human-computer games, or games of our computer agent vs. other existing agents to obtain sophisticated game strategies if training results of the above datasets are not robust enough.

5.Timeline

(1) Game framework including human-computer interface and basic machine learning core;

(2) Implementing basic Tic-tac-toe game (before "milestone");

(3) Write up milestone report;

(4) Implementing other games variated from Tic-tac-toe, such as 4-connect;

(5) Write up final report.

References

[1] R. Sutton and A. Barto. Reinforcement Learning: An Introduction, MIT Press, Cambridge, MA: pp. 10-15, 156. 1998

[2] I. Ghory. Reinforcement learning in board games, Technique report, Department of Computer Science, University of Bristol. May 2004