Our task is to classify dog images into 120 breeds using machine learning methods. To do this, we implemented several classification methods, including k-NN and multi-class SVM. This milestone report presents our experimental results together with our analysis of them.

- About the Dataset

- Our dataset contains 12000 training examples covering 120 breeds (100 examples per breed). We used two versions of the feature matrix: a kernelized one, to which the histogram intersection kernel has been applied, and a non-kernelized one. The kernelized matrix has 12000 features per example; the non-kernelized matrix has 5376.

- K-NN classifier

- We implemented a k-NN classifier as a baseline. Because k-NN is easy to implement and is known to perform fairly well, we could run it with several values of k and compare its accuracy against our main method, SVM.

- Multi-class SVM (one-vs-one)

- We tested the multi-class SVM classifier on 12 of the 120 dog breeds.

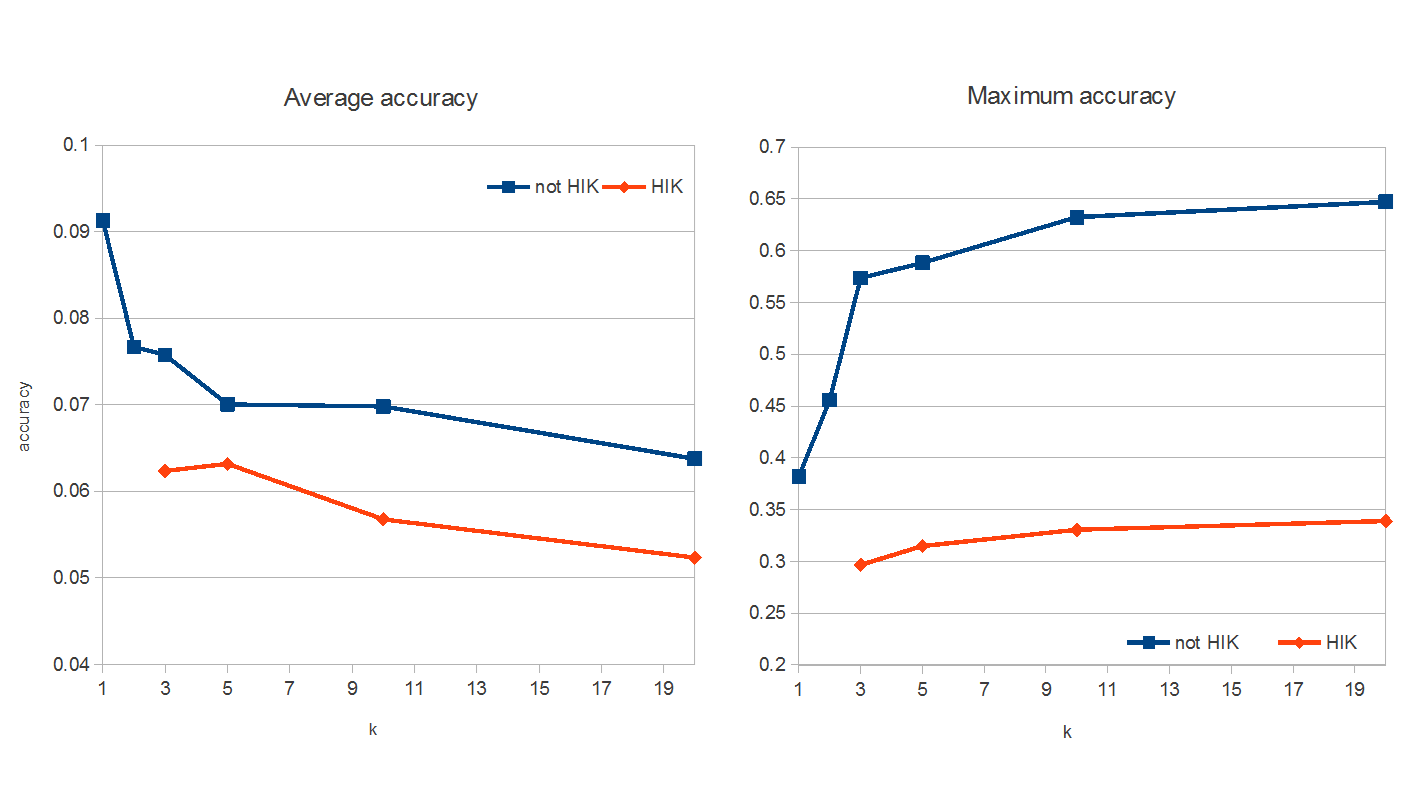

For k-NN, we used both versions of the dataset, with and without the histogram intersection kernel applied. In each case we used the whole training set (12000 examples) and the whole test set (8580 examples), and we compared the results.
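As a minimal sketch of what applying the histogram intersection kernel could look like, the code below computes the kernel value of each example against every training example. The function names and the toy histograms are illustrative assumptions, not our actual pipeline. Under this reading, each kernelized row has one entry per training example, which would be consistent with the kernelized matrix having 12000 features.

```python
def histogram_intersection(x, y):
    """Histogram intersection kernel: sum of element-wise minima."""
    if len(x) != len(y):
        raise ValueError("histograms must have the same number of bins")
    return sum(min(a, b) for a, b in zip(x, y))


def kernel_matrix(rows, train_rows):
    """Kernel value of every row against every training row.

    Each output row has one column per training example, so its width
    equals the training-set size rather than the raw feature count.
    """
    return [[histogram_intersection(r, t) for t in train_rows] for r in rows]


# Toy histograms (made-up values, not taken from the dog dataset).
train = [[0.5, 0.3, 0.2], [0.1, 0.6, 0.3], [0.4, 0.4, 0.2]]
test = [[0.2, 0.2, 0.6]]

K_train = kernel_matrix(train, train)  # 3 x 3, symmetric
K_test = kernel_matrix(test, train)    # 1 x 3

print(len(K_train), len(K_train[0]))
print(len(K_test), len(K_test[0]))
```

The test examples are compared against the training examples only, so the training and test matrices share the same columns.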

For both datasets, the average accuracy tends to be higher when k is smaller, while the maximum accuracy increases as k grows. This suggests that accuracy is spread more evenly across classes when k is small. We also observe that, for k-NN, the dataset without the histogram intersection kernel (the blue line in the figures) may be the more appropriate choice.

As the figure shows, k = 1 gave an average accuracy of 9.01%.
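The comparison across values of k can be sketched as follows. This is a simplified illustration under stated assumptions: it uses squared Euclidean distance, takes "average accuracy" to mean the mean of the per-class accuracies, and runs on made-up two-dimensional points rather than the dog features.

```python
from collections import Counter, defaultdict


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def knn_predict(train_x, train_y, query, k):
    """Label the query by majority vote among its k nearest training points."""
    nearest = sorted(range(len(train_x)),
                     key=lambda i: squared_distance(train_x[i], query))[:k]
    votes = Counter(train_y[i] for i in nearest)
    return votes.most_common(1)[0][0]


def per_class_accuracy(train_x, train_y, test_x, test_y, k):
    """Fraction of correctly classified test examples, separately per class."""
    correct, total = defaultdict(int), defaultdict(int)
    for x, y in zip(test_x, test_y):
        total[y] += 1
        if knn_predict(train_x, train_y, x, k) == y:
            correct[y] += 1
    return {label: correct[label] / total[label] for label in total}


# Toy data (made up for illustration only).
train_x = [[0.0, 0.0], [0.1, 0.2], [1.0, 1.0], [0.9, 1.1], [0.2, 0.1], [1.1, 0.9]]
train_y = ["a", "a", "b", "b", "a", "b"]
test_x = [[0.05, 0.1], [1.0, 0.95], [0.6, 0.6], [0.4, 0.4]]
test_y = ["a", "b", "a", "b"]

for k in (1, 3, 5):
    acc = per_class_accuracy(train_x, train_y, test_x, test_y, k)
    average = sum(acc.values()) / len(acc)   # mean over classes
    maximum = max(acc.values())              # best single class
    print(f"k={k}: average={average:.2f}, maximum={maximum:.2f}")
```

Reporting both the mean and the maximum of the per-class accuracies makes it possible to see whether a gain comes from a few easy classes or is spread across all of them.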

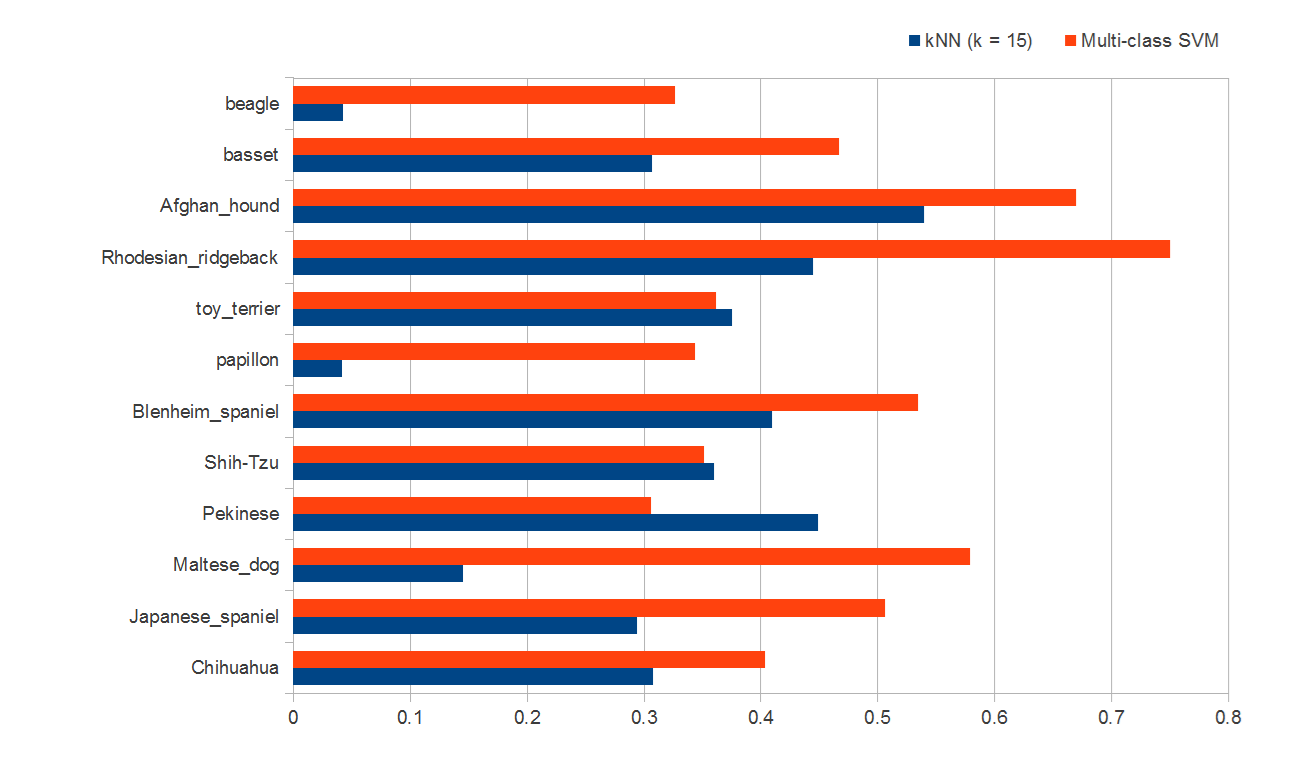

The figure above compares k-NN with the multi-class SVM (one-vs-one), using only 12 of the 120 dog breeds. The k-NN result was obtained on the raw data, that is, without the histogram intersection kernel. In the experiment above, k = 1 gave the best k-NN result; we report k = 15 here because it performed better on the 12-breed subset. The multi-class SVM (48% accuracy) outperformed k-NN (30% accuracy), so we expect the multi-class SVM to perform better on the full 120-breed classification as well.
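The one-vs-one scheme can be sketched as below: one binary classifier is trained per pair of classes, and the predicted class is the one that wins the most pairwise decisions. To keep the example self-contained, the binary learner here is a nearest-centroid stand-in, not an SVM, and all names and data points are hypothetical. The number of pairwise classifiers is n(n-1)/2, which gives 66 for 12 breeds and 7140 for 120 breeds.

```python
from collections import Counter
from itertools import combinations


def centroid(rows):
    return [sum(col) / len(rows) for col in zip(*rows)]


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def train_pair(x, y, a, b):
    """Stand-in binary learner (nearest centroid); a binary SVM would go here."""
    ca = centroid([row for row, label in zip(x, y) if label == a])
    cb = centroid([row for row, label in zip(x, y) if label == b])
    return lambda q: a if sq_dist(q, ca) <= sq_dist(q, cb) else b


def train_one_vs_one(x, y):
    """Train one binary classifier for every unordered pair of classes."""
    labels = sorted(set(y))
    return [train_pair(x, y, a, b) for a, b in combinations(labels, 2)]


def predict_one_vs_one(models, q):
    """Each pairwise classifier casts one vote; the most-voted class wins."""
    votes = Counter(model(q) for model in models)
    return votes.most_common(1)[0][0]


def num_pairwise(n_classes):
    return n_classes * (n_classes - 1) // 2


# Toy three-class data (made up for illustration only).
x = [[0, 0], [0, 1], [5, 5], [5, 6], [10, 0], [10, 1]]
y = ["a", "a", "b", "b", "c", "c"]

models = train_one_vs_one(x, y)
print(len(models), predict_one_vs_one(models, [0.2, 0.5]))
print(num_pairwise(12), num_pairwise(120))
```

The pair count grows quadratically with the number of classes, which is worth keeping in mind when moving from the 12-breed subset to all 120 breeds.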

- We will implement decision trees that use a binary SVM (a strong classifier) to split each node. Since decision trees and SVMs are both strong classifiers, we expect the combination to give better accuracy.

- Each tree in the random forest gives a classification and "votes" for that class.
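A rough sketch of the planned structure is given below, assuming each internal node routes an example with a linear decision of the form w·x + b ≥ 0 (the decision form of a trained linear SVM) and the forest takes a majority vote over its trees. The weights are fixed by hand rather than trained, and the class names, breed labels and helper functions are hypothetical; how the SVM at each node would be trained is not covered here.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class Node:
    label: Optional[str] = None  # set on leaves only
    split: Optional[Callable[[Sequence[float]], bool]] = None  # binary decision
    left: Optional["Node"] = None   # taken when split(x) is True
    right: Optional["Node"] = None  # taken when split(x) is False


def linear_split(w, b):
    """Hypothetical node split: the sign of w.x + b, as a linear SVM would give."""
    return lambda x: sum(wi * xi for wi, xi in zip(w, x)) + b >= 0


def tree_predict(node, x):
    """Follow the binary decisions from the root down to a leaf."""
    while node.label is None:
        node = node.left if node.split(x) else node.right
    return node.label


def forest_predict(trees, x):
    """Each tree votes for one class; the class with the most votes wins."""
    votes = Counter(tree_predict(tree, x) for tree in trees)
    return votes.most_common(1)[0][0]


def stump(w, b):
    # One-level tree with hand-picked weights (illustrative, not trained).
    return Node(split=linear_split(w, b),
                left=Node(label="husky"), right=Node(label="beagle"))


trees = [stump([1, 0], -0.5), stump([0, 1], -0.5), stump([1, 1], -1.0)]

print(forest_predict(trees, [0.9, 0.2]))  # two of three trees vote "husky"
print(forest_predict(trees, [0.1, 0.3]))  # all three trees vote "beagle"
```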

5/12: Build the decision tree.

5/18: Finish testing the decision trees with the SVM classifier (on the first 12-breed set).

5/22: Implement the random forest.

5/27: Summarize the results.

- [1] Stanford Dogs dataset, Stanford Vision Lab

- [2] Aditya Khosla, Nityananda Jayadevaprakash, Bangpeng Yao and Li Fei-Fei. Novel dataset for Fine-Grained Image Categorization. First Workshop on Fine-Grained Visual Categorization (FGVC), IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2011.

- [3] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li and L. Fei-Fei. ImageNet: A Large-Scale Hierarchical Image Database. IEEE Computer Vision and Pattern Recognition (CVPR), 2009.