COSC174 Milestone

Machine Learning with Prof. Lorenzo Torresani

Rui wang, Tianxing Li, and Jing Li

Feb 19th, 2013

'Dartmouth Daily Updates'(D2U) is a daily email news digest sent to faculty, staff and students at 1am, which provides us information about what is happening on campus, such as academic seminars, art performances, sports games, traditional events, etc.. However, the email digest is usually very long and the information it contains is not categorized to satisfy people's different interest. Imagine you are about to go to bed at 1am, or you quickly check your email before starting your work in the early morning, and we usually delete it without paying any attention. Unfortunately, we miss a lot of useful information.

Based on this, we developed our goal which is to provide a dreaming application that classifies the emails to a predefined set of generic class, such as talks, arts, academic, medical, free food, etc., for people to get their interested information at their first sight.

Since most of the events within D2U are written as short text messages, which do not provide sufficient word occurrences, traditional classification methods, such as 'Bag-of-words', could not classify the information precisely and efficiently. To solve this limitation, our novel classification method not only gathers the information from the text messages, but also extracts additional background information including time of the events, sender profile and URL that belong to the college life scenarios.

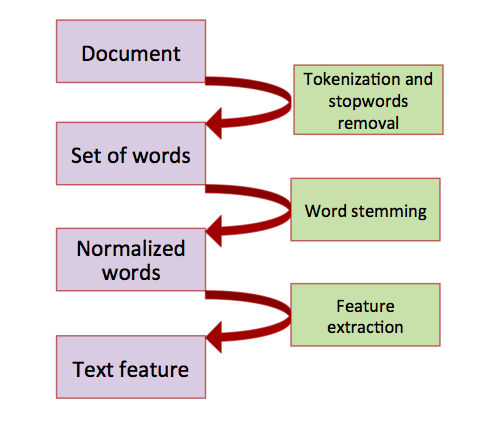

The purpose of this step was to preprocess text and represent each document as a feature vector. In details, for each event from D2U email, we did the following subtasks:

1. Data Acquisition:

In this step, we wrote a Python script to automatically scan the website's link that belongs to each event from D2U database. The content within the websites was extracted and used as our raw data.

2. Data preprocessing:

|

The purpose of this step was to preprocess text and represent each document as a feature vector. In details, for each event from D2U email, we did the following subtasks:

1) A word splitter was used to separate the whole text into individual words. For example, the input 'Dartmouth Daily Updates Classifier' was splitted into four words, 'Dartmouth', 'Daily', 'Updates', and 'Classifier', separately.

2) Common words that are usually useless for text classification were removed. In this case, words such as 'a', 'the', 'I', 'he', 'she', 'is', 'are', etc., were removed.

3) Porter stemmer was used to normalize the words that were derived from the same root. For instance, by doing word stemming, both of 'classifying' and 'classified' were changed to 'classify'.

4) Feature extraction. In our project, each word was used as a feature, and TF-IDF was further calculated as the value of the features.

3. Ground truth labeling

Our dataset covers the latest 90 days' emails from D2U, which has a total size of 803 events. We then manually labeled all those events using following 15 different categories. The pre-defined labels were mapped into two groups, with differently represented forms(Group 1) and events’ contents(Group 2). The labels are shown below:

Group 1 ="1 TALK 2 MEETING 3 PERFORM 4 EXHIBITION 5 GAME".

Group 2 ="11 ART 12 HOUSING 13 JOBS 14 MED 15 SPORTS 16 IT 17 ACADEMIC 18 PARTY 19 FREE_FOOD 0 OTHER".

4. Multi-Label Classification

There are two categories of multi-label classification methods: a) problem transformation methods,

and b) algorithm adaption methods. We deployed a problem transformation method to solve our

problem.

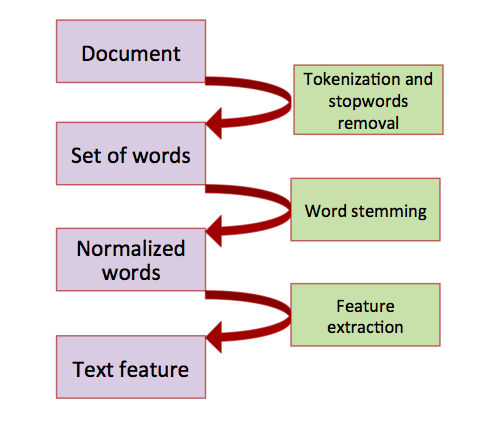

Our basic idea is to transform a multi-label classification problem into a set of binary classification

problems. For better illustration, we introduce an example which has 3 documents, each has 2

labels. We will train 4 binary classifier for each of these labels.

| Doc. | TALK | PERFORM | ART | ACADEMIC | |

| 0 | 1 | 0 | 1 | 0 | |

| 1 | 1 | 0 | 0 | 1 | |

| 2 | 0 | 1 | 1 | 0 | |

For each classifier, we generate different set of training set based on the label of each documents. If we are training classifier for label A, we select documents which has label A as positive cases and documents which has other labels as negative cases. Take the case illustrated in the above table as an example, when we train the model for label TALK, we select doc 0 and doc 1 as positive cases, and doc 2 as negative cases; for label ART, we select doc 0 and doc 2 as positive cases, and doc 1 as negative cases.

In single-label classification problems, each instance only have one label. Both single-class classifiers and multi-class classifiers can be used to solve this kind of problems. In our problem, however, each instance may have multiple labels. Multi-class classifiers will not work properly in multi-label classification problems since one document’s labels may confuse the classifier. For instance, if a document has label TALK and ART, the training set will need to contain 2 identical documents with different labels, which will cause some trouble for the classifier to distinguish different labels. The only possible way to use multi-class classification method to solve multi-label classification problem is to take the combinations of the labels as new set of labels. In that case, if we have n labels, after the label combination, we will have ∑ k=1n( n k) labels. The number of labels is too large which may have an impact on the performance of the classifier.

Our classifier training pipeline is shown in the following graph:

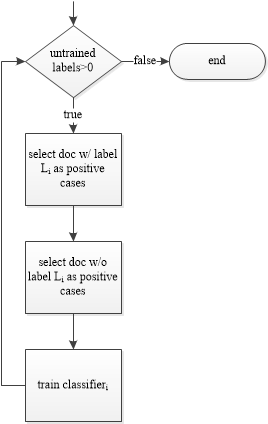

To find all labels for a given document, it should go through every classifier. Every classifier will

decide if it belongs to a specific label. We can get our final classification result after combining the

results from all classifiers.

5. Test and validation:

As shown above, both multi-label and six different single-label classifiers(shown below) were built to predict the label.

1) L2-regularized logistic regression (primal)

2) L2-regularized L2-loss support vector classification (dual)

3) L2-regularized L1-loss support vector classification (dual)

4) Support vector classification by Crammer and Singer

5) L1-regularized L2-loss support vector classification

6) L2-regularized logistic regression (dual)

7) SVM

To validate and visualize our results, we used 5-cross validation and both the precision and recall of models was calculated. The results are shown in the next section.

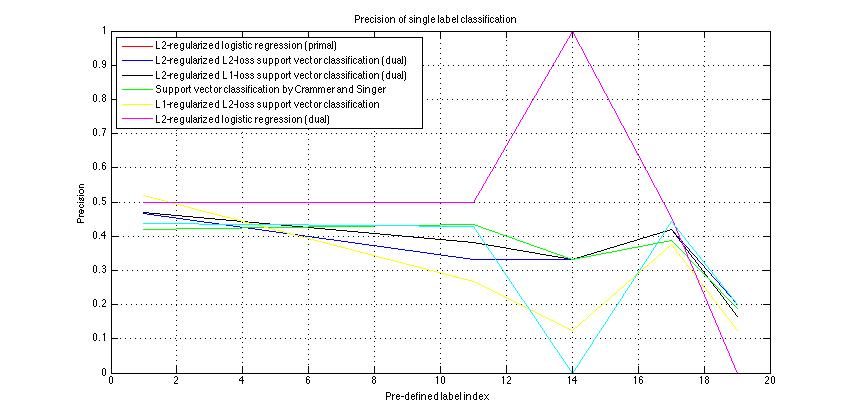

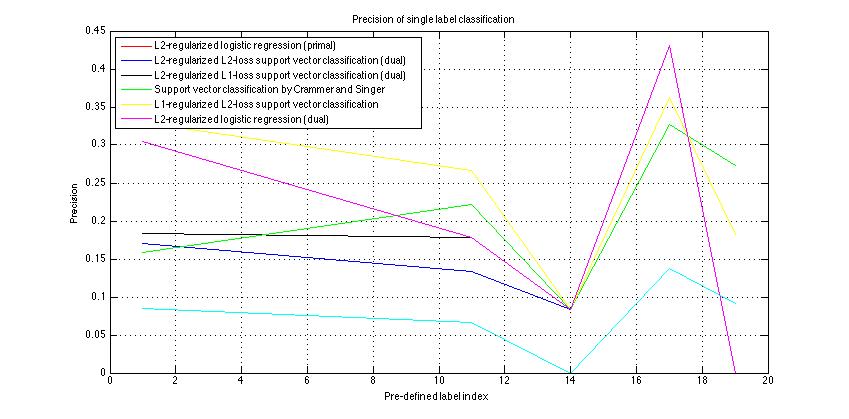

1. Performance of single label classification:

|

|

Based on our result, different classifiers have better/worse performance on different labels. Classifiers achieved better performance on labels- TALK, ART and ACADEMIC than the rest 3 labels. After further investigation on our training set, we found there were more documents with one or more labels under these three categories, which indicates that the size of our training set has a big impact on the classification performance.

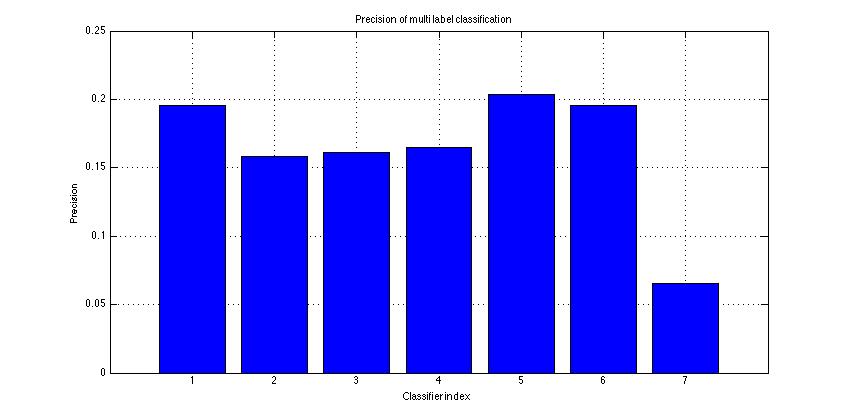

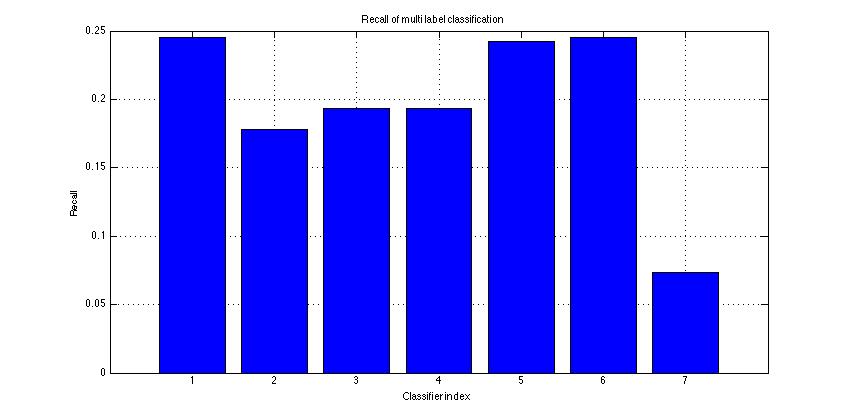

2. Performance of multi label classification:

|

|

Since our multi-label classification method was built on a set of classifiers, the performance of the multi-label classification had a huge correlation with the performance of single-label classification method it was based on. We can see it clearly from the result.

One big difficulty we came though was the limited data size. Although we tried our best to label as many emails as possible, since manually labeling each email event takes about 1-2 minute and D2U database contains only 90 days emails with 803 events, those 803 events was used as our dataset.

Besides that, during the ground truth labeling process, we found that some events without detailed description were difficult to be classified by our classifiers. For example, some event contains only one-sentence description of an article or film and lacks background information. In those cases, text feature may not be good way to do the classification. To solve this problem, more features, such as URL, will be added besides the text feature. The URLs of the event and sender information contain a lot of useful background knowledge. By looking at a URL, we may find the original departments or schools the email was sent from. Therefore, such strong prior knowledge can provide us a good direction to predict the classification results.

One big difficulty we came though was the limited data size. Although we tried our best to label as many emails as possible, since manually labeling each email event takes about 1-2 minute and D2U database contains only 90 days emails with 803 events, those 803 events was used as our dataset.

Also, we found some labels are highly related. For example, if one event is classified into PERFORMANCE group, it is more likely to be classified into ART group too. Thus, one way we can improve our classifier is to explore the co-occurrence of the labels to boost the performance of multi-label classification.

[1]Bharath Sriram, David Fuhry, Engin Demir, Hakan Ferhatosmanoglu, Murat Demirbas. Short Text Classification in Twitter to Improve Information Filtering.

[2]Xia Hu, Nan Sun, Chao Zhang, Tat-Seng Chua. Exploiting Internal and External Semantics for the Clustering of Short Texts Using World Knowledge.

[3]Sarah Zelikovitz. Transductive LSI for Short Text Classification Problems.

[4]Barbara Rosario. Latent Semantic Indexing: An overview.

[5]Deerwester, Dumais, Furnas, Lanouauer, and Harshman. Indexing by latent semantic analysis.

[6]David M. Blei, Andrew Y. Ng, Michael I. Jordan. Latent Dirichlet Allocation.