**Rendering Algorithms FA19 // Final Project Dario Seyb**

# Project Goals and Inspiration

During a previous research project I came across a [paper](https://iopscience.iop.org/article/10.1088/0264-9381/32/6/065001) related to the movie Interstellar in which the authors describe how they approached rendering the black hole.

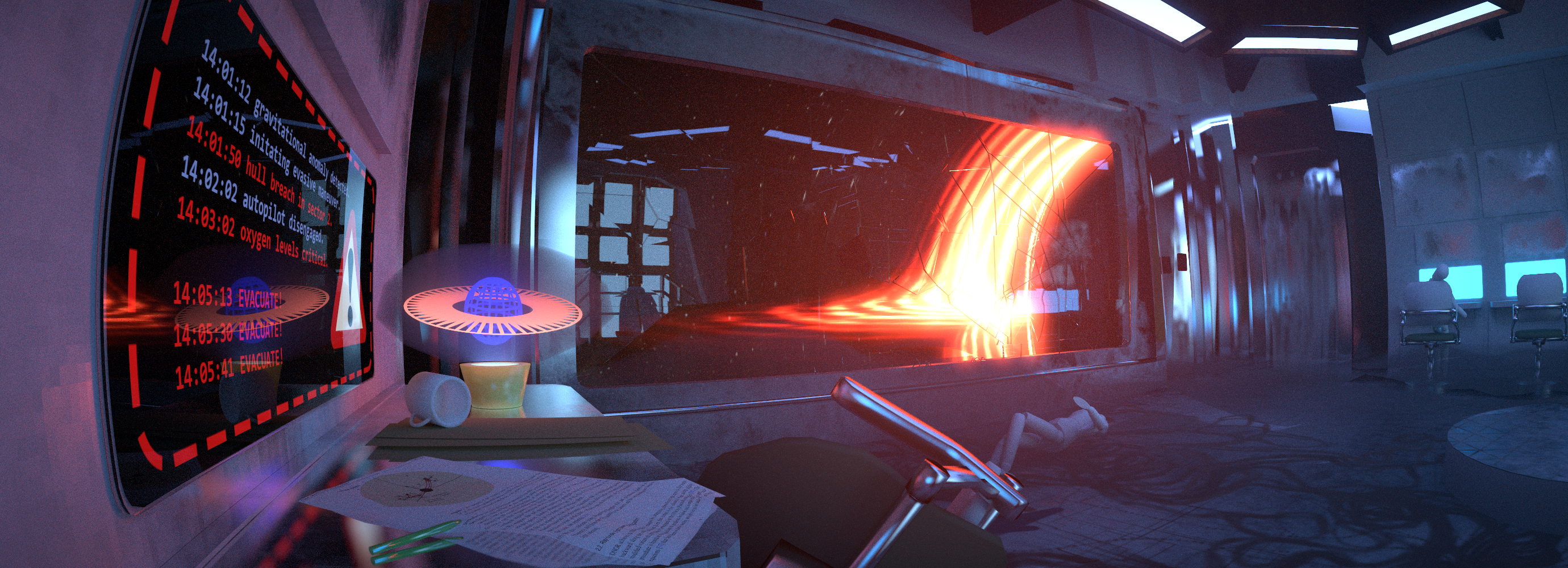

The theme, "The Outer Limits", seemed to fit rendering black holes very well, and I had been wanting to explore that paper for a while.

Additionally, I decided to add a bridge scene, inspired by Star Trek, to frame the black hole.

This allowed me to tell a story as well.

# Major Features

## Black Hole Rendering

To render a black hole we start with an undistorted environment map of the night sky.

Due to the immense gravitational forces around the black hole, light is bent, resulting in the characteristic strong "gravitational lensing" effect.

The math required to calculate the paths of the bent light rays goes deep into the territory of general relativity.

Luckily, the paper mentioned earlier lays out the calculations nicely, making it fairly straightforward to implement.

My implementation can be found in `blackhole.h`.
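To give a feel for what tracing a bent ray involves, here is a minimal sketch. It is not the code in `blackhole.h`, and it deliberately swaps in a simpler technique: the paper treats spinning (Kerr) black holes, while this sketch assumes a non-spinning (Schwarzschild) hole, for which photon paths can be reproduced by integrating an effective acceleration of `-1.5 * h^2 * x / |x|^5` in units where the Schwarzschild radius is 1. All names (`bendRay`, `RayFate`, the step size and escape radius) are hypothetical.

```cpp
#include <array>
#include <cmath>

using Vec3 = std::array<double, 3>;

Vec3 add(const Vec3& a, const Vec3& b) { return {a[0] + b[0], a[1] + b[1], a[2] + b[2]}; }
Vec3 scale(const Vec3& a, double s) { return {a[0] * s, a[1] * s, a[2] * s}; }
double dot(const Vec3& a, const Vec3& b) { return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]; }
Vec3 cross(const Vec3& a, const Vec3& b) {
    return {a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]};
}

enum class RayFate { Captured, Escaped, Unresolved };
struct BentRay { RayFate fate; Vec3 direction; };

// Effective acceleration that reproduces Schwarzschild photon paths
// (assumption: hole at the origin, Schwarzschild radius = 1, no spin).
Vec3 acceleration(const Vec3& x, double h2) {
    double r2 = dot(x, x);
    return scale(x, -1.5 * h2 / (r2 * r2 * std::sqrt(r2)));
}

// Integrates a ray starting at x with direction v until it falls into the
// hole or leaves a sphere of radius escapeRadius.
BentRay bendRay(Vec3 x, Vec3 v, double dt = 0.01, double escapeRadius = 50.0,
                int maxSteps = 200000) {
    v = scale(v, 1.0 / std::sqrt(dot(v, v)));
    Vec3 c = cross(x, v);
    double h2 = dot(c, c);  // squared angular momentum, conserved along the path
    for (int i = 0; i < maxSteps; ++i) {
        double r = std::sqrt(dot(x, x));
        if (r < 1.0) return {RayFate::Captured, v};
        if (r > escapeRadius) return {RayFate::Escaped, scale(v, 1.0 / std::sqrt(dot(v, v)))};
        // Classic fixed-step RK4 for x' = v, v' = a(x).
        Vec3 k1x = v, k1v = acceleration(x, h2);
        Vec3 k2x = add(v, scale(k1v, dt / 2)), k2v = acceleration(add(x, scale(k1x, dt / 2)), h2);
        Vec3 k3x = add(v, scale(k2v, dt / 2)), k3v = acceleration(add(x, scale(k2x, dt / 2)), h2);
        Vec3 k4x = add(v, scale(k3v, dt)), k4v = acceleration(add(x, scale(k3x, dt)), h2);
        x = add(x, scale(add(add(k1x, scale(k2x, 2)), add(scale(k3x, 2), k4x)), dt / 6));
        v = add(v, scale(add(add(k1v, scale(k2v, 2)), add(scale(k3v, 2), k4v)), dt / 6));
    }
    return {RayFate::Unresolved, v};
}
```

In a renderer along these lines, the direction of an escaped ray would be used to look up the environment map, while captured rays would return black. An adaptive step size would likely be a better choice than the fixed `dt` used here.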

Another prominent feature of many black holes is the accretion disk.

This is a cloud of hot matter that orbits the black hole.

As the gravitational pull draws the matter closer, it heats up further and emits bright light.

![Accretion disk](accretion.png)

I decided to model this disk by hand instead of relying on simulation. This made it easy for me to control the appearance.

Below you can see the final environment created using the black hole renderer I implemented for this project.

## Spectral Rendering

While I did not end up using it in the final scene, I implemented spectral rendering following PBRT.

Spectra are represented using the `SampledSpectrum` class introduced in the textbook.

I extended the renderer to sample a wavelength for each ray. This results in color noise in unconverged images.
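The idea of one wavelength per ray can be sketched as a Monte Carlo estimator. This is an illustration only, not the project's code: the uniform sampling over 400 to 700 nm, and the names `sampleWavelength` and `estimateSpectralIntegral`, are assumptions. Because each ray sees a single wavelength, individual estimates differ from ray to ray, which is where the color noise comes from.

```cpp
#include <functional>
#include <random>

struct WavelengthSample {
    double lambdaNm;  // sampled wavelength in nanometers
    double pdf;       // probability density of that sample
};

// Picks one wavelength uniformly from the (assumed) visible range.
WavelengthSample sampleWavelength(std::mt19937& rng, double minNm = 400.0,
                                  double maxNm = 700.0) {
    std::uniform_real_distribution<double> uniform(minNm, maxNm);
    return {uniform(rng), 1.0 / (maxNm - minNm)};
}

// Averages numRays single-wavelength estimates of the integral of
// `spectrum` over the sampled range.
double estimateSpectralIntegral(const std::function<double(double)>& spectrum,
                                int numRays, std::mt19937& rng) {
    double sum = 0.0;
    for (int i = 0; i < numRays; ++i) {
        WavelengthSample s = sampleWavelength(rng);
        sum += spectrum(s.lambdaNm) / s.pdf;
    }
    return sum / numRays;
}
```

In a full renderer the integrand would also include the color matching functions, so that the averaged result can be converted to XYZ and then RGB.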

While implementing this, I found and reported a bug in the online edition of PBRT, which the authors have since fixed.

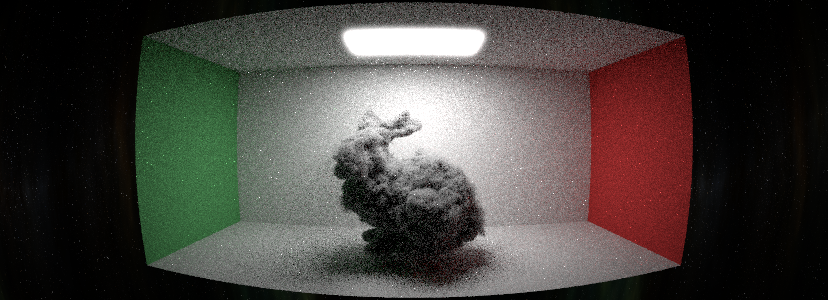

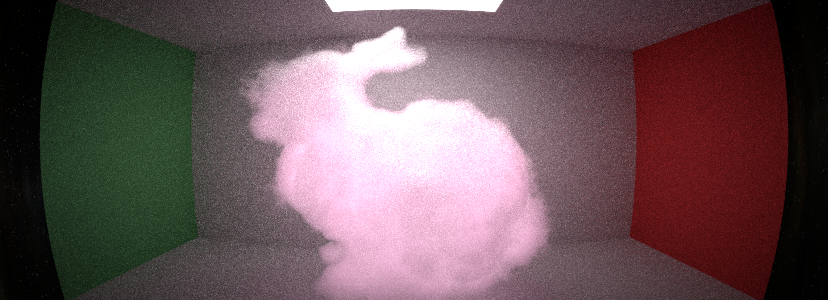

## Volumetric Path Tracing

I implemented simple volumetric path tracing following Peter Shirley.

The implementation supports heterogeneous media via delta tracking and can read OpenVDB files such as the bunny shown below.
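Delta tracking can be sketched in a few lines. The following is a generic version of the technique under stated assumptions, not the project's implementation: the medium is reduced to a 1D extinction function along the ray, `sigmaMajorant` must bound that function from above, and the name `sampleCollisionDistance` is hypothetical.

```cpp
#include <cmath>
#include <functional>
#include <random>

// Samples the distance to the next real collision along a ray segment
// [0, tMax) through a heterogeneous medium. sigmaT(t) is the extinction
// coefficient at distance t and must never exceed sigmaMajorant.
// Returns a negative value if the ray leaves the segment without colliding.
double sampleCollisionDistance(const std::function<double(double)>& sigmaT,
                               double sigmaMajorant, double tMax,
                               std::mt19937& rng) {
    std::uniform_real_distribution<double> uniform(0.0, 1.0);
    double t = 0.0;
    while (true) {
        // Tentative step as if the medium were homogeneous with the majorant.
        t -= std::log(1.0 - uniform(rng)) / sigmaMajorant;
        if (t >= tMax) return -1.0;
        // Accept as a real collision with probability sigmaT / majorant;
        // otherwise it is a "null" collision and the walk continues.
        if (uniform(rng) * sigmaMajorant < sigmaT(t)) return t;
    }
}
```

For a volume loaded from an OpenVDB grid, `sigmaT` would wrap a density lookup at the point `origin + t * direction`, and the majorant would be the maximum density in the grid times the extinction scale.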

Both a simple isotropic phase function and the Henyey-Greenstein phase function are supported.
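For reference, the Henyey-Greenstein phase function and its standard inversion-based sampling routine can be written as below. This is a sketch with hypothetical function names. It assumes the convention in which `cosTheta` is measured between the direction of travel before and after scattering; PBRT uses the opposite sign convention.

```cpp
#include <cmath>

constexpr double kPi = 3.14159265358979323846;

// Henyey-Greenstein phase function, normalized over the sphere.
// g in (-1, 1): g > 0 scatters forward, g < 0 backward, g = 0 is isotropic.
double henyeyGreenstein(double cosTheta, double g) {
    double denom = 1.0 + g * g - 2.0 * g * cosTheta;
    return (1.0 - g * g) / (4.0 * kPi * denom * std::sqrt(denom));
}

// Maps a uniform random number xi in [0, 1) to a cosTheta distributed
// proportionally to the phase function.
double sampleHenyeyGreensteinCos(double xi, double g) {
    if (std::abs(g) < 1e-3) return 2.0 * xi - 1.0;  // isotropic limit
    double s = (1.0 - g * g) / (1.0 - g + 2.0 * g * xi);
    return (1.0 + g * g - s * s) / (2.0 * g);
}
```

Because the samples follow the phase function exactly, the ratio of phase function value to sampling density is 1 and the path throughput does not need to be reweighted at a scattering event.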

# Minor Features

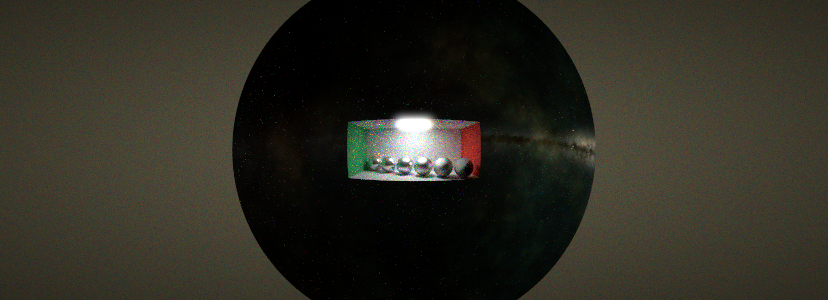

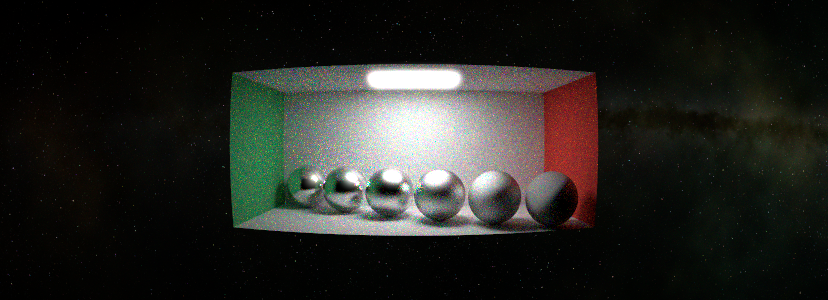

## Fisheye Lens Model

To frame the final scene the way I wanted, I needed a very wide-angle camera.

The projection we have been using breaks down at wider angles and introduces unsightly distortions.

Real wide-angle lenses produce a "fisheye" view of the world, in which straight edges near the sides of the frame appear curved.
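One common way to generate such rays is the equidistant fisheye mapping, where the angle from the optical axis grows linearly with the distance from the image center. The sketch below assumes that mapping (the text above does not say which fisheye model was used), a camera looking down the negative z axis, and hypothetical names.

```cpp
#include <array>
#include <cmath>

using Vec3 = std::array<double, 3>;

// Equidistant fisheye projection. (sx, sy) are screen coordinates in
// [-1, 1] and fovRadians is the full field of view across the image circle.
// Writes a unit direction to `dir` and returns true, or returns false for
// points outside the image circle.
bool fisheyeDirection(double sx, double sy, double fovRadians, Vec3& dir) {
    double r = std::sqrt(sx * sx + sy * sy);
    if (r > 1.0) return false;
    double theta = r * fovRadians * 0.5;  // angle from the optical axis
    double phi = std::atan2(sy, sx);      // angle around the optical axis
    dir = {std::sin(theta) * std::cos(phi), std::sin(theta) * std::sin(phi),
           -std::cos(theta)};
    return true;
}
```

Unlike a perspective projection, this mapping stays well behaved at a field of view of 180 degrees and beyond, since it never divides by the cosine of the view angle.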

## Depth of Field

I implemented the simple depth of field model described by Peter Shirley.
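A thin-lens model of this kind can be sketched as follows. The camera-space setup (camera at the origin, looking down the negative z axis) and the names `thinLensRay` and `Ray` are assumptions for illustration. The lens sample `(lensU, lensV)` is expected to lie in the unit disk, for example from rejection sampling.

```cpp
#include <array>
#include <cmath>

using Vec3 = std::array<double, 3>;

struct Ray {
    Vec3 origin;
    Vec3 direction;
};

// Turns a pinhole ray through the camera origin into a thin-lens ray.
// Every ray for a given pixel passes through the same point on the focus
// plane z = -focusDistance, so only objects at that distance appear sharp.
Ray thinLensRay(const Vec3& pinholeDir, double lensU, double lensV,
                double apertureRadius, double focusDistance) {
    double t = focusDistance / -pinholeDir[2];
    Vec3 focus = {pinholeDir[0] * t, pinholeDir[1] * t, pinholeDir[2] * t};
    Vec3 origin = {apertureRadius * lensU, apertureRadius * lensV, 0.0};
    Vec3 d = {focus[0] - origin[0], focus[1] - origin[1], focus[2] - origin[2]};
    double len = std::sqrt(d[0] * d[0] + d[1] * d[1] + d[2] * d[2]);
    Vec3 dir = {d[0] / len, d[1] / len, d[2] / len};
    return {origin, dir};
}
```

Setting `apertureRadius` to zero recovers the pinhole camera, and larger radii give a shallower depth of field.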

## Environment Sampling

I implemented simple environment map support, replacing the constant background color with a texture, but without importance sampling.
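Looking up an environment map for a ray that leaves the scene comes down to converting its direction into texture coordinates. The sketch below assumes an equirectangular (latitude-longitude) map with the y axis pointing up; both the layout and the name `directionToLatLong` are assumptions, and other conventions differ only in axis order and offsets.

```cpp
#include <cmath>

constexpr double kPi = 3.14159265358979323846;

struct UV {
    double u;
    double v;
};

// Maps a unit direction to equirectangular texture coordinates in [0, 1]^2.
// v = 0 is straight up (+y), v = 1 is straight down (-y).
UV directionToLatLong(double x, double y, double z) {
    double u = 0.5 + std::atan2(z, x) / (2.0 * kPi);
    double yClamped = std::fmax(-1.0, std::fmin(1.0, y));  // guard acos against rounding
    double v = std::acos(yClamped) / kPi;
    return {u, v};
}
```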

## Postprocessing Effects

I implemented multiple postprocessing effects. The implementations can be found [here](../include/dirt/postprocess.h).

(###) Bloom

Bloom is a popular effect, particularly in real-time games, but also in the film industry.

In fact, it was explicitly pointed out in the Interstellar paper that applying bloom was necessary to match the footage from the IMAX camera.

There are different physical effects that can cause bloom, but they all relate to light scattering inside the camera.

For example, the lenses might not be perfectly clear or clean. Even a thin film of grease on the lens will result in some of the light scattering diffusely.

Another source of bloom, also known as "halo", is light scattering from the back of the film plate.

This causes a small, usually red, glow around bright parts of the image.

There are many ways to implement this effect. One could, for example, simulate light scattering inside the lens assembly.

A more practical approach is to apply a screen-space filter to the rendered image.

This boils down to convolving the image with some kernel, usually a Gaussian, which is what I implemented.
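A Gaussian bloom filter can be sketched as below for a single-channel image. This is not the code in `postprocess.h`. The brightness threshold, the separable two-pass blur, the clamped edges, and all function names are assumptions made for the sake of a compact example.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Normalized 1D Gaussian kernel covering [-radius, radius] pixels.
std::vector<float> gaussianKernel(int radius, float sigma) {
    std::vector<float> k(2 * radius + 1);
    float sum = 0.0f;
    for (int i = -radius; i <= radius; ++i) {
        k[i + radius] = std::exp(-0.5f * i * i / (sigma * sigma));
        sum += k[i + radius];
    }
    for (float& w : k) w /= sum;
    return k;
}

// One blur pass along x (dx = 1, dy = 0) or y (dx = 0, dy = 1).
// Samples outside the image are clamped to the nearest edge pixel.
std::vector<float> blurPass(const std::vector<float>& img, int w, int h,
                            const std::vector<float>& k, int dx, int dy) {
    int radius = int(k.size()) / 2;
    std::vector<float> out(img.size());
    for (int y = 0; y < h; ++y) {
        for (int x = 0; x < w; ++x) {
            float acc = 0.0f;
            for (int i = -radius; i <= radius; ++i) {
                int sx = std::min(std::max(x + i * dx, 0), w - 1);
                int sy = std::min(std::max(y + i * dy, 0), h - 1);
                acc += k[i + radius] * img[sy * w + sx];
            }
            out[y * w + x] = acc;
        }
    }
    return out;
}

// Keeps only the energy above `threshold`, blurs it, and adds the glow back.
std::vector<float> bloom(const std::vector<float>& img, int w, int h,
                         float threshold, float sigma) {
    std::vector<float> bright(img.size());
    for (std::size_t i = 0; i < img.size(); ++i)
        bright[i] = std::max(img[i] - threshold, 0.0f);
    std::vector<float> k = gaussianKernel(int(std::ceil(3.0f * sigma)), sigma);
    std::vector<float> glow = blurPass(blurPass(bright, w, h, k, 1, 0), w, h, k, 0, 1);
    std::vector<float> out(img.size());
    for (std::size_t i = 0; i < img.size(); ++i) out[i] = img[i] + glow[i];
    return out;
}
```

Splitting the 2D Gaussian into a horizontal and a vertical pass gives the same result as the full 2D convolution at a much lower cost per pixel.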

(###) Vignette

Vignette is the term for darkening the corners of the image. This can happen when the response of the sensor includes a diffuse term.
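A simple way to apply such a darkening is to multiply each pixel by a factor that falls off with distance from the image center. The quadratic falloff and the `strength` parameter below are assumptions chosen for illustration, not necessarily the falloff used in this project.

```cpp
#include <algorithm>

// Brightness multiplier for pixel (x, y): 1 at the image center, falling
// towards (1 - strength) at the corners with a quadratic profile.
float vignetteFactor(int x, int y, int width, int height, float strength) {
    float nx = (x + 0.5f) / width * 2.0f - 1.0f;   // pixel center in [-1, 1]
    float ny = (y + 0.5f) / height * 2.0f - 1.0f;
    float r2 = 0.5f * (nx * nx + ny * ny);         // 0 at the center, close to 1 at the corners
    return 1.0f - strength * std::min(r2, 1.0f);
}
```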

(###) Monochrome

The monochrome effect converts the color image to black and white. This has no direct equivalent in real cameras but can provide some nice artistic control.

## Material Model

I planned to implement the Principled PBR material used by Blender, but due to time constraints I had to settle for a simple blend material.

This is the one we implemented in class; I extract its parameters from the Blender scene.

It is energy conserving, but a proper microfacet model would be necessary to achieve more realistic results.

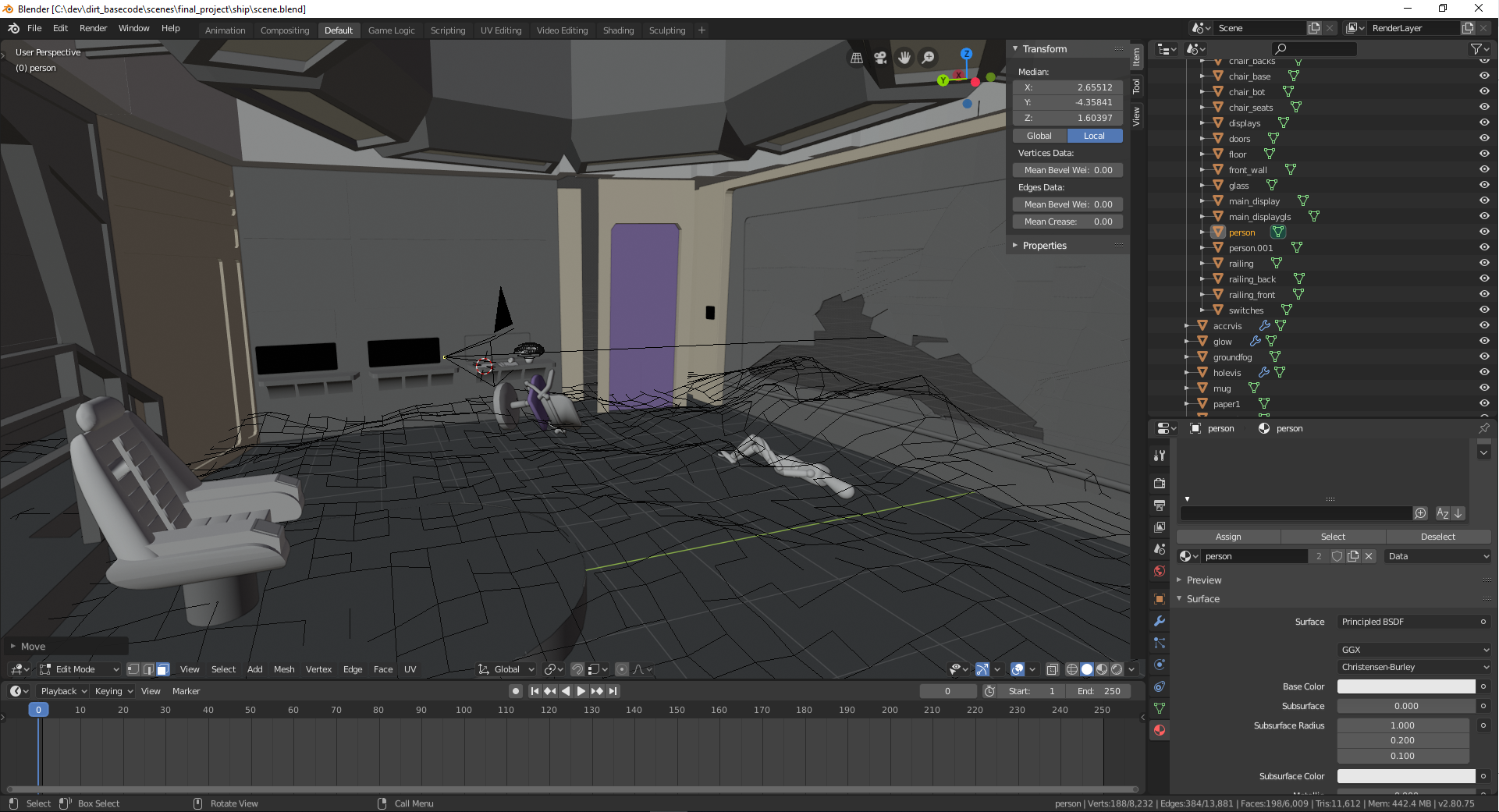

## Blender Exporter

I implemented a Blender plugin that supports exporting to the dirt scene format as well as running dirt and showing the result from inside Blender.

This was invaluable for iteration times, especially while I was doing the rough scene layout and camera positioning.

The exporter can be found [here](../tools/blender/operator_file_export_dirt.py).

It uses parts of the .obj exporter that ships with Blender to export individual models and converts materials to an approximate dirt equivalent.

For the final scene I specified some rendering parameters by hand instead of taking the values supplied by Blender.

# Final Image

# Used Resources

Additional resources are pointed out in code when relevant.

- [Gravitational lensing by spinning black holes in astrophysics, and in the movie Interstellar](https://iopscience.iop.org/article/10.1088/0264-9381/32/6/065001)

- [Shadertoy](https://shadertoy.com)

- [Physically Based Rendering: From Theory To Implementation](http://www.pbr-book.org/)

- [Kerr metric](https://en.wikipedia.org/wiki/Kerr_metric)

- [Innermost stable circular orbit](https://en.wikipedia.org/wiki/Innermost_stable_circular_orbit)

- [Integration of Ordinary Differential Equations](https://people.cs.clemson.edu/~dhouse/courses/817/papers/adaptive-h-c16-2.pdf)

- [Ray Tracing in One Weekend](https://www.realtimerendering.com/raytracing/Ray%20Tracing%20in%20a%20Weekend.pdf)

- [Ray Tracing: The Next Week](https://www.realtimerendering.com/raytracing/Ray%20Tracing_%20The%20Next%20Week.pdf)

- [Ray Tracing: The Rest of Your Life](https://www.realtimerendering.com/raytracing/Ray%20Tracing_%20the%20Rest%20of%20Your%20Life.pdf)

- [Star Trek: Sutherland Bridge](https://sketchfab.com/3d-models/star-trek-sutherland-bridge-7dcbb60473164f36a0a4f39df11b34c1)