In this lecture, we discuss Android's sensors and accessing them via the SensorManager.

Smartphones have a number of interesting sensors beyond the camera and the microphone.

In this lecture we will focus mainly on the accelerometer. The SensorManager provides access to the 3-axis accelerometer. We use its signals (the x, y, and z axes) to infer activity in MyRuns5; specifically, we build an accelerometer pipeline that extracts "features" from the signals and uses these features to infer the user's activity. More on that in the MyRuns5 lab.

The demo code used in this lecture includes:

Sensor box for Android is a great app that allows you to quickly check out the sensors on your phone and interact with them. This is a very cool and geeky app.

We will use the ShakeSensorKotlin.zip app to demonstrate how to read the accelerometer. The starter code can be found here.

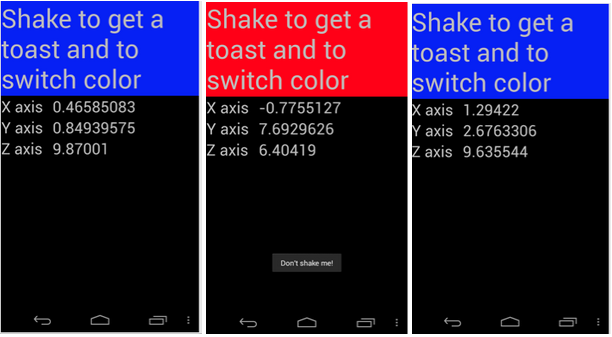

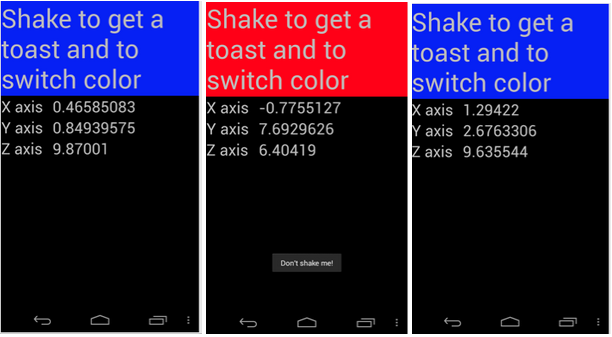

This simple demo continuously displays the x, y, and z axis accelerometer readings. If the phone is shaken, the background color of the text changes, as shown in the example. To do something cooler, we also have the phone open the dialer with a number filled in.

We first declare that our activity implements the SensorEventListener interface. This requires overriding onSensorChanged(event: SensorEvent) and onAccuracyChanged(sensor: Sensor?, accuracy: Int). In this example, we only care about onSensorChanged, so we will leave onAccuracyChanged empty (more on this later).

class MainActivity : AppCompatActivity(), SensorEventListener {...}

We then obtain the SensorManager from the system's sensor service; we need it before we can access the accelerometer data.

sensorManager = getSystemService(SENSOR_SERVICE) as SensorManager

We ask the sensor manager for accelerometer data using Sensor.TYPE_ACCELEROMETER, but we could have asked for any sensor data that the phone produces (light, proximity, etc.). See the list of sensor types here.

We then register the listener (here, "this") for that sensor object and specify how fast we want the system to stream the data to us. Here we choose SENSOR_DELAY_NORMAL, which is a rate suitable for handling things like screen orientation changes.

val sensor = sensorManager.getDefaultSensor(Sensor.TYPE_ACCELEROMETER)

sensorManager.registerListener(this, sensor, SensorManager.SENSOR_DELAY_NORMAL)

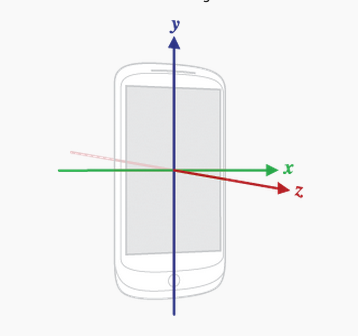

The figure shows the x, y, and z axes of the accelerometer with respect to the phone's orientation.

See the developer notes on the definition of the coordinate system and on the SensorEvent object, which is passed to the onSensorChanged() callback when new data is available.

The X axis is horizontal and points to the right, the Y axis is vertical and points up and the Z axis points towards the outside of the front face of the screen. In this system, coordinates behind the screen have negative Z values.

Inside onSensorChanged(), we get the sensor readings in the x, y, and z directions. The raw values are in m/s^2; to put them on a common scale in units of g, we normalize them by dividing by Earth's gravity (SensorManager.GRAVITY_EARTH).

    override fun onSensorChanged(event: SensorEvent) {
        if (event.sensor.type == Sensor.TYPE_ACCELEROMETER) {
            // Raw values are in m/s^2; divide by gravity to express them in g
            x = (event.values[0] / SensorManager.GRAVITY_EARTH).toDouble()
            y = (event.values[1] / SensorManager.GRAVITY_EARTH).toDouble()
            z = (event.values[2] / SensorManager.GRAVITY_EARTH).toDouble()

            // Show the latest readings on screen
            xLabel.text = "X axis: $x"
            yLabel.text = "Y axis: $y"
            zLabel.text = "Z axis: $z"

            checkShake()
        }
    }
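To see what the normalization does to a concrete reading, here is a minimal sketch in plain Kotlin that runs off-device. The names `toG` and `GReading` are hypothetical helpers introduced for illustration and are not part of the demo app; the constant is written out by hand and is assumed to match SensorManager.GRAVITY_EARTH.

```kotlin
// Standard gravity in m/s^2 (assumed equal to SensorManager.GRAVITY_EARTH).
val GRAVITY_EARTH = 9.80665f

// Hypothetical holder for one accelerometer reading expressed in g.
data class GReading(val x: Double, val y: Double, val z: Double)

// Convert raw accelerometer values (m/s^2, ordered x, y, z) to units of g.
fun toG(values: FloatArray): GReading {
    require(values.size >= 3) { "expected x, y, and z values" }
    return GReading(
        (values[0] / GRAVITY_EARTH).toDouble(),
        (values[1] / GRAVITY_EARTH).toDouble(),
        (values[2] / GRAVITY_EARTH).toDouble()
    )
}

fun main() {
    // Phone lying flat on a table, screen up: gravity appears on the +Z axis.
    val flat = toG(floatArrayOf(0f, 0f, 9.80665f))
    println(flat)  // GReading(x=0.0, y=0.0, z=1.0)
}
```

With the phone at rest, the magnitude of the normalized vector is about 1 g, which gives a convenient baseline when picking a shake threshold.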

The helper function checkShake() checks whether the phone has been shaken, based on the magnitude of the acceleration and the time since the last detected shake. If so, it changes the background color of the text and opens the dialer with a number.

    private fun checkShake() {
        // Magnitude of the acceleration vector, in g
        val magnitude = Math.sqrt(x * x + y * y + z * z)
        currentTime = System.currentTimeMillis()

        // A shake: more than 3 g, and at least 300 ms since the last one
        if (magnitude > 3 && currentTime - lastTime > 300) {
            titleLabel.setBackgroundColor(Color.RED)

            // Open the dialer with the number filled in
            val intent = Intent(Intent.ACTION_DIAL)
            intent.data = Uri.parse("tel:123456")
            startActivity(intent)

            lastTime = currentTime
        }
    }
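The shake rule above can also be tried without a phone. The following is a rough sketch, not the demo app's code: `ShakeDetector` and `onSample` are hypothetical names, and the clock is passed in as a parameter (instead of calling System.currentTimeMillis()) so the same threshold and 300 ms gap can be exercised in plain Kotlin.

```kotlin
import kotlin.math.sqrt

// Hypothetical helper, not part of the demo app: applies the same rule as
// checkShake(), with the timestamp supplied by the caller.
class ShakeDetector(
    private val thresholdG: Double = 3.0,   // magnitude threshold, in g
    private val minIntervalMs: Long = 300   // minimum gap between shakes
) {
    private var lastShakeMs = 0L

    // Returns true when this sample counts as a new shake.
    fun onSample(x: Double, y: Double, z: Double, nowMs: Long): Boolean {
        val magnitude = sqrt(x * x + y * y + z * z)
        if (magnitude > thresholdG && nowMs - lastShakeMs > minIntervalMs) {
            lastShakeMs = nowMs
            return true
        }
        return false
    }
}

fun main() {
    val detector = ShakeDetector()
    println(detector.onSample(0.0, 0.0, 1.0, nowMs = 1_000))  // at rest: false
    println(detector.onSample(2.5, 2.0, 1.0, nowMs = 1_100))  // strong jolt: true
    println(detector.onSample(2.5, 2.0, 1.0, nowMs = 1_200))  // too soon: false
    println(detector.onSample(2.5, 2.0, 1.0, nowMs = 1_500))  // 400 ms later: true
}
```

The time check acts as a simple debounce: a single vigorous shake produces many samples above the threshold, and without the gap each of them would trigger the dialer again.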